Hadoop + Spark + Hive

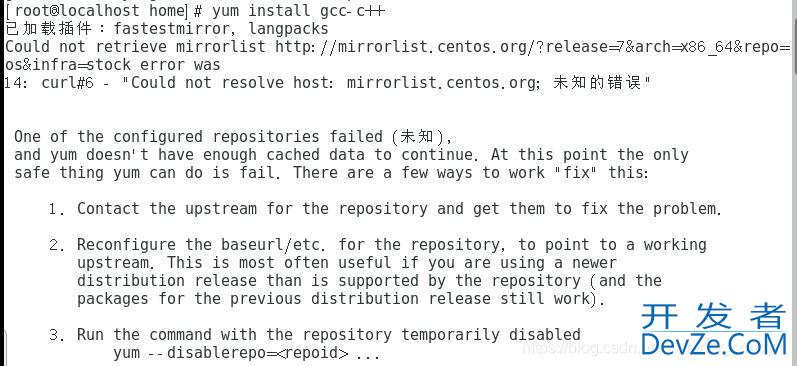

Starting the pyspark shell produces the following error:

ERROR ObjectStore: Version information found in metastore differs 2.1.0 from expected schema version 1.2.0. Schema verififcation is disabled hive.metastore.schema.verification so setting version.

Solution:

Hive configuration

Edit $HIVE_HOME/conf/hive-site.xml and add the following property:

    <property>
      <name>hive.metastore.uris</name>
      <value>thrift://master:9083</value>
      <description>Thrift uri for the remote metastore. Used by metastore client to connect to remote metastore.</description>
    </property>

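Start the Hive metastore

Start the metastore in the background:

$ hive --service metastore &

Check that the metastore is running:

$ jobs

[1]+ Running hive --service metastore &

Stop the metastore:

$ kill %1

The syntax is kill %jobid; here 1 is the job ID shown by jobs.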
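Job IDs such as %1 only exist in the shell that started the job. As a variation on the commands above, here is a minimal sketch that records the process ID in a file so the metastore can be stopped from another terminal. The function names start_metastore and stop_metastore and the /tmp file locations are hypothetical choices, not part of Hive.

```shell
#!/usr/bin/env bash
# Hypothetical locations for the PID and log files; adjust as needed.
PID_FILE="${PID_FILE:-/tmp/hive-metastore.pid}"
LOG_FILE="${LOG_FILE:-/tmp/hive-metastore.log}"

start_metastore() {
  # nohup lets the service keep running after the terminal is closed.
  nohup hive --service metastore >"$LOG_FILE" 2>&1 &
  # $! is the PID of the background process just started.
  echo $! >"$PID_FILE"
}

stop_metastore() {
  # Nothing to stop if no PID was recorded.
  [ -f "$PID_FILE" ] || return 1
  kill "$(cat "$PID_FILE")" && rm -f "$PID_FILE"
}
```

After sourcing the file, call start_metastore once, and stop_metastore when the service is no longer needed.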

Spark configuration

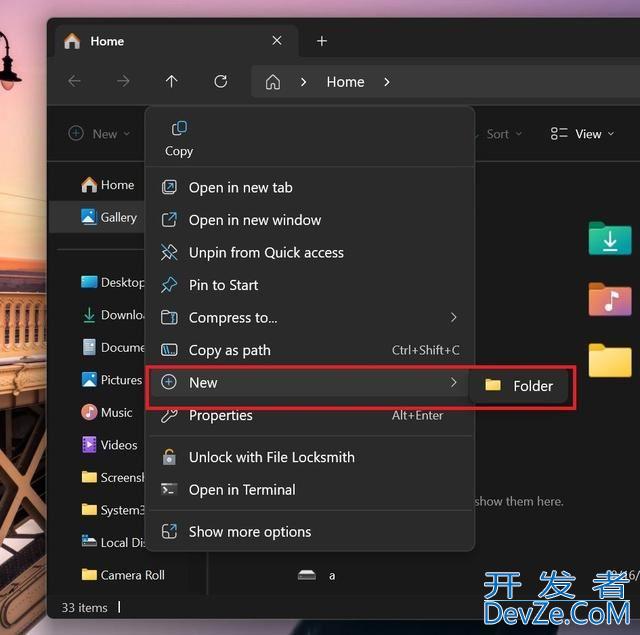

Copy or symlink $HIVE_HOME/conf/hive-site.xml into $SPARK_HOME/conf/.
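The symlink option might look like the sketch below. It assumes HIVE_HOME and SPARK_HOME are already set in the environment; the function name link_hive_site is a hypothetical helper introduced here for illustration.

```shell
#!/usr/bin/env bash
link_hive_site() {
  local src="$HIVE_HOME/conf/hive-site.xml"
  local dest="$SPARK_HOME/conf/hive-site.xml"
  # Refuse to create a dangling link if the Hive config is missing.
  [ -f "$src" ] || { echo "not found: $src" >&2; return 1; }
  # -s creates a symbolic link; -f replaces an existing file or link.
  ln -sf "$src" "$dest"
}
```

A symlink keeps Spark in step with later edits to the Hive configuration, whereas a plain cp would have to be repeated after every change.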

Restart pyspark; the error no longer appears.
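If the error persists after the restart, one thing worth checking is whether the metastore at thrift://master:9083 is actually accepting connections. The following is a minimal sketch, assuming Bash (the /dev/tcp redirection is a Bash feature, not a real file); the function name metastore_reachable is hypothetical, and the defaults are taken from the hive.metastore.uris value above.

```shell
#!/usr/bin/env bash
metastore_reachable() {
  local host="${1:-master}"
  local port="${2:-9083}"
  # Opening /dev/tcp/HOST/PORT makes Bash attempt a TCP connection.
  # The subshell exits non-zero if the connection cannot be opened.
  (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null
}
```

For example, `metastore_reachable master 9083 && echo "metastore is up"` prints the message only when the port accepts a connection. This only shows that something is listening on the port, not that it is a healthy metastore.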
