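Contents

- 1. The MP4Writer class

- 2. Steps for writing an H.264 stream to an MP4 file

- 3. Steps for writing an H.265 stream to an MP4 file

- 4. Generating MP4 files from the command line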

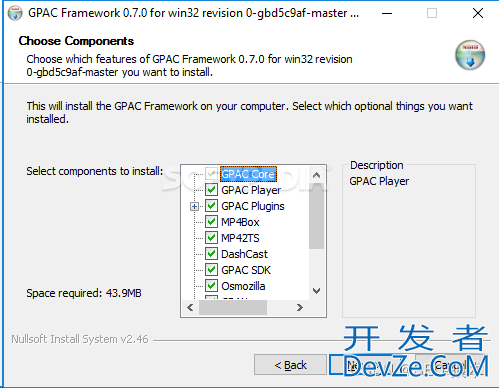

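GPAC is aimed mainly at students and content creators. It is a cross-platform multimedia framework, released under the LGPL license, that developers can use to produce open-source media. It supports a wide range of popular file types, from common formats (such as AVI, MPEG, and MOV) to complex ones (such as MPEG-4 Systems or VRML/X3D) and 360-degree video.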

1. The MP4Writer class

MP4Writer.h

#ifndef _MP4WRITER_H_

#define _MP4WRITER_H_

#define GPAC_MP4BOX_MINI

#include "gpac/setup.h"

#define MP4_AUDIO_TYPE_INVALID 0

#define MP4_AUDIO_TYPE_AAC_MAIN 1

#define MP4_AUDIO_TYPE_AAC_LC 2

#define MP4_AUDIO_TYPE_AAC_SSR 3

#define MP4_AUDIO_TYPE_AAC_LD 23

#ifdef __cplusplus

extern "C" {

#endif

void* MP4_Init();

s32 MP4_CreatFile(void *pCMP4Writer, char *strFileName);

s32 MP4_InitVideo265(void *pCMP4Writer, u32 TimeScale);

s32 MP4_Write265Sample(void *pCMP4Writer, u8 *pData, u32 Size, u64 TimeStamp);

s32 MP4_InitVideo264(void *pCMP4Writer, u32 TimeScale);

s32 MP4_Write264Sample(void *pCMP4Writer, u8 *pData, u32 Size, u64 TimeStamp);

s32 MP4_InitAudioAAC(void *pCMP4Writer, u8 AudioType, u32 SampleRate, u8 Channel, u32 TimeScale);

s32 MP4_WriteAACSample(void *pCMP4Writer, u8 *pData, u32 Size, u64 TimeStamp);

void MP4_CloseFile(void *pCMP4Writer);

void MP4_Exit(void *pCMP4Writer);

#ifdef __cplusplus

}

#endif

#endif

MP4Writer.cpp

#include "MP4Writer.h"

#include <Winsock2.h>

extern "C" {

#include "gpac/isomedia.h"

#include "gpac/constants.h"

#include "gpac/internal/media_dev.h"

}

#define INIT_STATUS 0

#define CONFIG_STATUS 1

#define CONFIG_FINISH 2

static s8 GetSampleRateID(u32 SamplRate)

{

switch (SamplRate)

{

case 96000: return 0;

case 88200: return 1;

case 64000: return 2;

case 48000: return 3;

case 44100: return 4;

case 32000: return 5;

case 24000: return 6;

case 22050: return 7;

case 16000: return 8;

case 12000: return 9;

case 11025: return 10;

case 8000 : return 11;

case 7350 : return 12;

default: return -1;

}

}

//gf_m4a_get_profile

static u8 GetAACProfile(u8 AudioType, u32 SampleRate, u8 Channel)

{

switch (AudioType)

{

case 2: /* AAC LC */

{

if (Channel <= 2) return (SampleRate <= 24000) ? 0x28 : 0x29; /* LC@L1 or LC@L2 */

if (Channel <= 5) return (SampleRate <= 48000) ? 0x2A : 0x2B; /* LC@L4 or LC@L5 */

return (SampleRate <= 48000) ? 0x50 : 0x51; /* LC@L4 or LC@L5 */

}

case 5: /* HE-AAC - SBR */

{

if (Channel <= 2) return (SampleRate <= 24000) ? 0x2C : 0x2D; /* HE@L2 or HE@L3 */

if (Channel <= 5) return (SampleRate <= 48000) ? 0x2E : 0x2F; /* HE@L4 or HE@L5 */

return (SampleRate <= 48000) ? 0x52 : 0x53; /* HE@L6 or HE@L7 */

}

case 29: /*HE-AACv2 - SBR+PS*/

{

if (Channel <= 2) return (SampleRate <= 24000) ? 0x30 : 0x31; /* HE-AACv2@L2 or HE-AACv2@L3 */

if (Channel <= 5) return (SampleRate <= 48000) ? 0x32 : 0x33; /* HE-AACv2@L4 or HE-AACv2@L5 */

return (SampleRate <= 48000) ? 0x54 : 0x55; /* HE-AACv2@L6 or HE-AACv2@L7 */

}

default: /* default to HQ */

{

if (Channel <= 2) return (SampleRate < 24000) ? 0x0E : 0x0F; /* HQ@L1 or HQ@L2 */

return 0x10; /* HQ@L3 */

}

}

}

static void GetAudioSpecificConfig(u8 AudioType, u8 SampleRateID, u8 Channel, u8 *pHigh, u8 *pLow)

{

u16 Config;

Config = (AudioType & 0x1f);

Config <<= 4;

Config |= SampleRateID & 0x0f;

Config <<= 4;

Config |= Channel & 0x0f;

Config <<= 3;

*pLow = Config & 0xff;

Config >>= 8;

*pHigh = Config & 0xff;

}

/* The returned data includes the leading 4-byte start code 0x00000001 */

static u8* FindNalu(u8 *pStart, u32 Size, u8 *pNaluType, u32 *pNaluSize)

{

u8 *pEnd;

u8 *pCur;

u8 *pOut;

u8 NaluType;

if (4 >= Size)

return NULL;

/* Find the first 0x00000001 */

pCur = pStart;

pEnd = pStart + Size - 4;

while (pCur < pEnd)

{

if ( (0 == pCur[0]) && (0 == pCur[1]) && (0 == pCur[2]) && (1 == pCur[3]) )

break;

pCur++;

}

if (pCur >= pEnd)

return NULL;

NaluType = (pCur[4] >> 1) & 0x3f;

*pNaluType = NaluType;

if (1 == NaluType || 19 == NaluType) /* P-frame or I-frame; assumes the slice is the last NAL unit in each packet */

{

*pNaluSize = Size - (pCur - pStart);

return pCur;

}

pOut = pCur;

/* Find the second 0x00000001 */

pCur += 5;

while (pCur <= pEnd)

{

if ( (0 == pCur[0]) && (0 == pCur[1]) && (0 == pCur[2]) && (1 == pCur[3]) )

break;

pCur++;

}

if (pCur <= pEnd)

{

*pNaluSize = pCur - pOut;

return pOut;

}

*pNaluSize = Size - (pOut - pStart);

return pOut;

}

/* The returned data includes the leading 4-byte start code 0x00000001 */

static u8* FindNalu264(u8 *pStart, u32 Size, u8 *pNaluType, u32 *pNaluSize)

{

u8 *pEnd;

u8 *pCur;

u8 *pOut;

u8 NaluType;

if (4 >= Size)

return NULL;

/* Find the first 0x00000001 */

pCur = pStart;

pEnd = pStart + Size - 4;

while (pCur < pEnd)

{

if ((0 == pCur[0]) && (0 == pCur[1]) && (0 == pCur[2]) && (1 == pCur[3]))

break;

pCur++;

}

if (pCur >= pEnd)

return NULL;

NaluType = (pCur[4]) & 0x1f;

*pNaluType = NaluType;

if (1 == NaluType || 5 == NaluType) /* P-frame or I-frame; assumes the slice is the last NAL unit in each packet */

{

*pNaluSize = Size - (pCur - pStart);

return pCur;

}

pOut = pCur;

/* Find the second 0x00000001 */

pCur += 5;

while (pCur <= pEnd)

{

if ((0 == pCur[0]) && (0 == pCur[1]) && (0 == pCur[2]) && (1 == pCur[3]))

break;

pCur++;

}

if (pCur <= pEnd)

{

*pNaluSize = pCur - pOut;

return pOut;

}

*pNaluSize = Size - (pOut - pStart);

return pOut;

}

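// Remember to strip the ADTS header from AAC data.

// Note: ttf_human_trace() is not defined in this listing; it is presumably a project-specific logging helper.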

static int AdtsDemux(unsigned char * data, unsigned int size, unsigned char** raw, int* raw_size)

{

int ret = 1;

if (size < 7) {

ttf_human_trace("adts: demux size too small");

return 0;

}

unsigned char* p = data;

unsigned char* pend = data + size;

//unsigned char* startp = 0;

while (p < pend) {

// decode the ADTS.

// @see aac-iso-13818-7.pdf, page 26

// 6.2 Audio Data Transport Stream, ADTS

// @see https://github.com/ossrs/srs/issues/212#issuecomment-64145885

// byte_alignment()

// adts_fixed_header:

// 12bits syncword,

// 16bits left.

// adts_variable_header:

// 28bits

// 12+16+28=56bits

// adts_error_check:

// 16bits if protection_absent

// 56+16=72bits

// if protection_absent:

// require(7bytes)=56bits

// else

// require(9bytes)=72bits

//startp = p;

// for aac, the frame must be ADTS format.

/*if (p[0] != 0xff || (p[1] & 0xf0) != 0xf0) {

ttf_human_trace("adts: not this format.");

return 0;

}*/

// syncword 12 bslbf

p++;

// 4bits left.

// adts_fixed_header(), 1.A.2.2.1 Fixed Header of ADTS

// ID 1 bslbf

// layer 2 uimsbf

// protection_absent 1 bslbf

int8_t pav = (*p++ & 0x0f);

//int8_t id = (pav >> 3) & 0x01;

/*int8_t layer = (pav >> 1) & 0x03;*/

int8_t protection_absent = pav & 0x01;

/**

* ID: MPEG identifier, set to '1' if the audio data in the ADTS stream are MPEG-2 AAC (See ISO/IEC 13818-7)

* and set to '0' if the audio data are MPEG-4. See also ISO/IEC 11172-3, subclause 2.4.2.3.

*/

//if (id != 0x01) {

// //ttf_human_trace("adts: id must be 1(aac), actual 0(mp4a).");

// // well, some system always use 0, but actually is aac format.

// // for example, houjian vod ts always set the aac id to 0, actually 1.

// // we just ignore it, and always use 1(aac) to demux.

// id = 0x01;

//}

//else {

// //ttf_human_trace("adts: id must be 1(aac), actual 1(mp4a).");

//}

//int16_t sfiv = (*p << 8) | (*(p + 1));

p += 2;

// profile 2 uimsbf

// sampling_frequency_index 4 uimsbf

// private_bit 1 bslbf

// channel_configuration 3 uimsbf

// original/copy 1 bslbf

// home 1 bslbf

//int8_t profile = (sfiv >> 14) & 0x03;

//int8_t sampling_frequency_index = (sfiv >> 10) & 0x0f;

/*int8_t private_bit = (sfiv >> 9) & 0x01;*/

//int8_t channel_configuration = (sfiv >> 6) & 0x07;

/*int8_t original = (sfiv >> 5) & 0x01;*/

/*int8_t home = (sfiv >> 4) & 0x01;*/

//int8_t Emphasis; @remark, Emphasis is removed, @see https://github.com/ossrs/srs/issues/212#issuecomment-64154736

// 4bits left.

// adts_variable_header(), 1.A.2.2.2 Variable Header of ADTS

// copyright_identification_bit 1 bslbf

// copyright_identification_start 1 bslbf

/*int8_t fh_copyright_identification_bit = (fh1 >> 3) & 0x01;*/

/*int8_t fh_copyright_identification_start = (fh1 >> 2) & 0x01;*/

// frame_length 13 bslbf: Length of the frame including headers and error_check in bytes.

// use the left 2bits as the 13 and 12 bit,

// the frame_length is 13bits, so we move 13-2=11.

//int16_t frame_length = (sfiv << 11) & 0x1800;

//int32_t abfv = ((*p) << 16)

// | ((*(p + 1)) << 8)

// | (*(p + 2));

p += 3;

// frame_length 13 bslbf: consume the first 13-2=11bits

// the fh2 is 24bits, so we move right 24-11=13.

//frame_length |= (abfv >> 13) & 0x07ff;

// adts_buffer_fullness 11 bslbf

/*int16_t fh_adts_buffer_fullness = (abfv >> 2) & 0x7ff;*/

// number_of_raw_data_blocks_in_frame 2 uimsbf

/*int16_t number_of_raw_data_blocks_in_frame = abfv & 0x03;*/

// adts_error_check(), 1.A.2.2.3 Error detection

if (!protection_absent) {

if (size < 9) {

ttf_human_trace("adts: protection_absent disappare.");

return 0;

}

// crc_check 16 Rpchof

/*int16_t crc_check = */p += 2;

}

// TODO: check the sampling_frequency_index

// TODO: check the channel_configuration

// raw_data_blocks

/*int adts_header_size = p - startp;

int raw_data_size = frame_length - adts_header_size;

if (raw_data_size > pend - p) {

ttf_human_trace("adts: raw data size too little.");

return 0;

}*/

//adts_codec_.protection_absent = protection_absent;

//adts_codec_.aac_object = srs_codec_aac_ts2rtmp((SrsAacProfile)profile);

//adts_codec_.sampling_frequency_index = sampling_frequency_index;

//adts_codec_.channel_configuration = channel_configuration;

//adts_codec_.frame_length = frame_length;

// @see srs_audio_write_raw_frame().

// TODO: FIXME: maybe need to resample audio.

//adts_codec_.sound_format = 10; // AAC

//if (sampling_frequency_index <= 0x0c && sampling_frequency_index > 0x0a) {

// adts_codec_.sound_rate = SrsCodecAudioSampleRate5512;

//}

//else if (sampling_frequency_index <= 0x0a && sampling_frequency_index > 0x07) {

// adts_codec_.sound_rate = SrsCodecAudioSampleRate11025;

//}

//else if (sampling_frequency_index <= 0x07 && sampling_frequency_index > 0x04) {

// adts_codec_.sound_rate = SrsCodecAudioSampleRate22050;

//}

//else if (sampling_frequency_index <= 0x04) {

// adts_codec_.sound_rate = SrsCodecAudioSampleRate44100;

//}

//else {

// adts_codec_.sound_rate = SrsCodecAudioSampleRate44100;

// //srs_warn("adts invalid sample rate for flv, rate=%#x", sampling_frequency_index);

//}

//adts_codec_.sound_type = srs_max(0, srs_min(1, channel_configuration - 1));

// TODO: FIXME: figure out the sound size from the ADTS header.

//adts_codec_.sound_size = 1; // 0(8bits) or 1(16bits).

// frame data.

*raw = p;

*raw_size = pend - p;

break;

}

return ret;

}

class MP4Writer

{

public:

MP4Writer();

~MP4Writer();

s32 CreatFile(char *strFileName);

s32 Init265(u32 TimeScale);

s32 Write265Sample(u8 *pData, u32 Size, u64 TimeStamp);

s32 Init264(u32 TimeScale);

s32 Write264Sample(u8 *pData, u32 Size, u64 TimeStamp);

s32 InitAAC(u8 AudioType, u32 SampleRate, u8 Channel, u32 TimeScale);

s32 WriteAACSample(u8 *pData, u32 Size, u64 TimeStamp);

void CloseFile();

private:

GF_ISOFile *m_ptFile;

u32 m_265TrackIndex;

u32 m_265StreamIndex;

u8 m_Video265Statue;

u32 m_264TrackIndex;

u32 m_264StreamIndex;

u8 m_Video264Statue;

s64 m_VideoTimeStampStart;

GF_HEVCConfig *m_ptHEVCConfig;

GF_HEVCParamArray* m_tHEVCNaluParam_VPS;

GF_HEVCParamArray* m_tHEVCNaluParam_SPS;

GF_HEVCParamArray* m_tHEVCNaluParam_PPS;

GF_AVCConfigSlot* m_tAVCConfig_VPS;

GF_AVCConfigSlot* m_tAVCConfig_SPS;

GF_AVCConfigSlot* m_tAVCConfig_PPS;

GF_AVCConfigSlot* m_tAVCConfig_SPS264;

GF_AVCConfigSlot* m_tAVCConfig_PPS264;

GF_AVCConfig *m_ptAVCConfig;

HEVCState *m_ptHEVCState;

AVCState *m_ptAVCState;

u32 m_AACTrackIndex;

u32 m_AACStreamIndex;

u8 m_AudioAACStatue;

s64 m_AudioTimeStampStart;

bool findfirsidr_;

void FreeAllMem();

};

MP4Writer::MP4Writer()

{

m_ptFile = NULL;

m_ptHEVCConfig = NULL;

m_ptAVCConfig = NULL;

m_Video265Statue = INIT_STATUS;

m_AudioAACStatue = INIT_STATUS;

m_Video264Statue = INIT_STATUS;

m_tHEVCNaluParam_VPS = NULL;

m_tHEVCNaluParam_SPS = NULL;

m_tHEVCNaluParam_PPS = NULL;

m_tAVCConfig_VPS = NULL;

m_tAVCConfig_SPS = NULL;

m_tAVCConfig_PPS = NULL;

m_tAVCConfig_SPS264 = NULL;

m_tAVCConfig_PPS264 = NULL;

m_ptHEVCState = NULL;

m_ptAVCState = NULL;

findfirsidr_ = false;

}

MP4Writer::~MP4Writer()

{

CloseFile();

}

s32 MP4Writer::CreatFile(char *strFileName)

{

if (NULL != m_ptFile)

{

return -1;

}

m_ptFile = gf_isom_open(strFileName, GF_ISOM_OPEN_WRITE, NULL);

if (NULL == m_ptFile)

{

return -1;

}

gf_isom_set_brand_info(m_ptFile, GF_ISOM_BRAND_MP42, 0);

return 0;

}

void MP4Writer::CloseFile()

{

if (m_ptFile)

{

gf_isom_close(m_ptFile);

m_ptFile = NULL;

FreeAllMem();

}

}

void MP4Writer::FreeAllMem()

{

if (m_ptHEVCConfig)

{

gf_odf_hevc_cfg_del(m_ptHEVCConfig);

//gf_list_del(m_ptHEVCConfig->param_array);

//free(m_ptHEVCConfig);

m_ptHEVCConfig = NULL;

}

if (m_ptAVCConfig) {

gf_odf_avc_cfg_del(m_ptAVCConfig);

//gf_list_del(m_ptAVCConfig->param_array);

//free(m_ptAVCConfig);

m_ptAVCConfig = NULL;

}

if (m_ptHEVCState) {

free(m_ptHEVCState);

m_ptHEVCState = NULL;

}

if (m_ptAVCState) {

free(m_ptAVCState);

m_ptAVCState = NULL;

}

m_tHEVCNaluParam_VPS = NULL;

m_tHEVCNaluParam_SPS = NULL;

m_tHEVCNaluParam_PPS = NULL;

m_tAVCConfig_VPS = NULL;

m_tAVCConfig_SPS = NULL;

m_tAVCConfig_PPS = NULL;

m_tAVCConfig_SPS264 = NULL;

m_tAVCConfig_PPS264 = NULL;

m_Video265Statue = INIT_STATUS;

m_AudioAACStatue = INIT_STATUS;

m_Video264Statue = INIT_STATUS;

findfirsidr_ = false;

}

s32 MP4Writer::Init265(u32 TimeScale)

{

if (NULL == m_ptFile || INIT_STATUS != m_Video265Statue)

return -1;

m_VideoTimeStampStart = -1;

/* Create the track */

m_265TrackIndex = gf_isom_new_track(m_ptFile, 0, GF_ISOM_MEDIA_VISUAL, TimeScale);

if (0 == m_265TrackIndex)

return -1;

if (GF_OK != gf_isom_set_track_enabled(m_ptFile, m_265TrackIndex, 1))

return -1;

/* Create the stream */

m_ptHEVCConfig = gf_odf_hevc_cfg_new();

if (NULL == m_ptHEVCConfig)

return -1;

m_ptHEVCConfig->nal_unit_size = 4;

m_ptHEVCConfig->configurationVersion = 1;

if (GF_OK != gf_isom_hevc_config_new(m_ptFile, m_265TrackIndex, m_ptHEVCConfig, NULL, NULL, &m_265StreamIndex))

return -1;

/* Initialize the stream configuration structures */

GF_SAFEALLOC(m_tHEVCNaluParam_VPS, GF_HEVCParamArray);

GF_SAFEALLOC(m_tHEVCNaluParam_SPS, GF_HEVCParamArray);

GF_SAFEALLOC(m_tHEVCNaluParam_PPS, GF_HEVCParamArray);

GF_SAFEALLOC(m_tAVCConfig_VPS, GF_AVCConfigSlot);

GF_SAFEALLOC(m_tAVCConfig_SPS, GF_AVCConfigSlot);

GF_SAFEALLOC(m_tAVCConfig_PPS, GF_AVCConfigSlot);

if (GF_OK != gf_list_add(m_ptHEVCConfig->param_array, m_tHEVCNaluParam_VPS))

return -1;

if (GF_OK != gf_list_add(m_ptHEVCConfig->param_array, m_tHEVCNaluParam_SPS))

return -1;

if (GF_OK != gf_list_add(m_ptHEVCConfig->param_array, m_tHEVCNaluParam_PPS))

return -1;

m_tHEVCNaluParam_VPS->nalus = gf_list_new();

if (NULL == m_tHEVCNaluParam_VPS->nalus)

return -1;

m_tHEVCNaluParam_SPS->nalus = gf_list_new();

if (NULL == m_tHEVCNaluParam_SPS->nalus)

return -1;

m_tHEVCNaluParam_PPS->nalus = gf_list_new();

if (NULL == m_tHEVCNaluParam_PPS->nalus)

return -1;

m_tHEVCNaluParam_VPS->type = GF_HEVC_NALU_VID_PARAM;

m_tHEVCNaluParam_SPS->type = GF_HEVC_NALU_SEQ_PARAM;

m_tHEVCNaluParam_PPS->type = GF_HEVC_NALU_PIC_PARAM;

m_tHEVCNaluParam_VPS->array_completeness = 1;

m_tHEVCNaluParam_SPS->array_completeness = 1;

m_tHEVCNaluParam_PPS->array_completeness = 1;

if (GF_OK != gf_list_add(m_tHEVCNaluParam_VPS->nalus, m_tAVCConfig_VPS))

return -1;

if (GF_OK != gf_list_add(m_tHEVCNaluParam_SPS->nalus, m_tAVCConfig_SPS))

return -1;

if (GF_OK != gf_list_add(m_tHEVCNaluParam_PPS->nalus, m_tAVCConfig_PPS))

return -1;

m_ptHEVCState = (HEVCState *)malloc(sizeof(HEVCState));//gf_malloc

if (NULL == m_ptHEVCState)

return -1;

memset(m_ptHEVCState, 0, sizeof(HEVCState));

m_Video265Statue = CONFIG_STATUS;

return 0;

}

s32 MP4Writer::Write265Sample(u8 *pData, u32 Size, u64 TimeStamp)

{

u8 *pStart = pData;

u8 NaluType;

u32 NaluSize = 0;

s32 ID;

GF_ISOSample tISOSample;

/* The H.265 track has not been initialized yet */

if (INIT_STATUS == m_Video265Statue)

return -1;

while (1)

{

pData = FindNalu(pData + NaluSize, Size - (u32)(pData - pStart) - NaluSize, &NaluType, &NaluSize);

if (NULL == pData)

break;

/* Once configuration is complete, handle only I-frames and P-frames */

if (CONFIG_FINISH == m_Video265Statue)

{

if (1 != NaluType && 19 != NaluType) /* P-frame, I-frame */

continue;

if (-1 == m_VideoTimeStampStart)

m_VideoTimeStampStart = TimeStamp;

*((u32 *)pData) = htonl(NaluSize - 4); /* The length must not include the 4-byte start code! */

tISOSample.data = (char *)pData;

tISOSample.dataLength = NaluSize;

tISOSample.IsRAP = (19 == NaluType)? RAP: RAP_NO;

tISOSample.DTS = TimeStamp - m_VideoTimeStampStart;

tISOSample.CTS_Offset = 0;

tISOSample.nb_pack = 0;

if (GF_OK != gf_isom_add_sample(m_ptFile, m_265TrackIndex, m_265StreamIndex, &tISOSample))

{

*((u32 *)pData) = htonl(1); /* Restore the 0x00000001 start code */

return -1;

}

*((u32 *)pData) = htonl(1); /* Restore the 0x00000001 start code */

}

/* Until configuration is complete, handle only the VPS, SPS, and PPS headers */

else if (CONFIG_STATUS == m_Video265Statue)

{

pData += 4;

NaluSize -= 4;

if (32 == NaluType && NULL == m_tAVCConfig_VPS->data) /* VPS */

{

ID = gf_media_hevc_read_vps((char *)pData , NaluSize, m_ptHEVCState);

m_ptHEVCConfig->avgFrameRate = m_ptHEVCState->vps[ID].rates[0].avg_pic_rate;

m_ptHEVCConfig->temporalIdNested = m_ptHEVCState->vps[ID].temporal_id_nesting;

m_ptHEVCConfig->constantFrameRate = m_ptHEVCState->vps[ID].rates[0].constand_pic_rate_idc;

m_ptHEVCConfig->numTemporalLayers = m_ptHEVCState->vps[ID].max_sub_layers;

m_tAVCConfig_VPS->id = ID;

m_tAVCConfig_VPS->size = (u16)NaluSize;

m_tAVCConfig_VPS->data = (char *)malloc(NaluSize);

if (NULL == m_tAVCConfig_VPS->data)

continue;

memcpy(m_tAVCConfig_VPS->data, pData, NaluSize);

}

else if (33 == NaluType && NULL == m_tAVCConfig_SPS->data) /* SPS */

{

ID = gf_media_hevc_read_sps((char *)pData, NaluSize, m_ptHEVCState);

m_ptHEVCConfig->tier_flag = m_ptHEVCState->sps[ID].ptl.tier_flag;

m_ptHEVCConfig->profile_idc = m_ptHEVCState->sps[ID].ptl.profile_idc;

m_ptHEVCConfig->profile_space = m_ptHEVCState->sps[ID].ptl.profile_space;

m_tAVCConfig_SPS->id = ID;

m_tAVCConfig_SPS->size = (u16)NaluSize;

m_tAVCConfig_SPS->data = (char *)malloc(NaluSize);

if (NULL == m_tAVCConfig_SPS->data)

continue;

memcpy(m_tAVCConfig_SPS->data, pData, NaluSize);

gf_isom_set_visual_info(m_ptFile, m_265TrackIndex, m_265StreamIndex, m_ptHEVCState->sps[ID].width, m_ptHEVCState->sps[ID].height);

}

else if (34 == NaluType && NULL == m_tAVCConfig_PPS->data) /* PPS */

{

ID = gf_media_hevc_read_pps((char *)pData, NaluSize, m_ptHEVCState);

m_tAVCConfig_PPS->id = ID;

m_tAVCConfig_PPS->size = (u16)NaluSize;

m_tAVCConfig_PPS->data = (char *)malloc(NaluSize);

if (NULL == m_tAVCConfig_PPS->data)

continue;

memcpy(m_tAVCConfig_PPS->data, pData, NaluSize);

}

else

{

continue;

}

if (m_tAVCConfig_VPS->data && m_tAVCConfig_SPS->data && m_tAVCConfig_PPS->data)

{

gf_isom_hevc_config_update(m_ptFile, m_265TrackIndex, m_265StreamIndex, m_ptHEVCConfig);

m_Video265Statue = CONFIG_FINISH;

if (m_ptHEVCState) {

free(m_ptHEVCState);

m_ptHEVCState = NULL;

}

}

}

}

return 0;

}

s32 MP4Writer::Init264(u32 TimeScale)

{

if (NULL == m_ptFile || INIT_STATUS != m_Video264Statue) /* check the H.264 state here, not the H.265 one */

return -1;

m_VideoTimeStampStart = -1;

/* Create the track */

m_264TrackIndex = gf_isom_new_track(m_ptFile, 0, GF_ISOM_MEDIA_VISUAL, TimeScale);

if (0 == m_264TrackIndex)

return -1;

if (GF_OK != gf_isom_set_track_enabled(m_ptFile, m_264TrackIndex, 1))

return -1;

/* Create the stream */

m_ptAVCConfig = gf_odf_avc_cfg_new();

if (NULL == m_ptAVCConfig)

return -1;

//m_ptAVCConfig->nal_unit_size = 4;

m_ptAVCConfig->configurationVersion = 1;

if (GF_OK != gf_isom_avc_config_new(m_ptFile, m_264TrackIndex, m_ptAVCConfig, NULL, NULL, &m_264StreamIndex))

return -1;

/* Initialize the stream configuration structures */

GF_SAFEALLOC(m_tAVCConfig_SPS264, GF_AVCConfigSlot);

GF_SAFEALLOC(m_tAVCConfig_PPS264, GF_AVCConfigSlot);

gf_list_add(m_ptAVCConfig->sequenceParameterSets, m_tAVCConfig_SPS264);

gf_list_add(m_ptAVCConfig->pictureParameterSets, m_tAVCConfig_PPS264);

m_ptAVCState = (AVCState *)malloc(sizeof(AVCState));//gf_malloc

if (NULL == m_ptAVCState)

return -1;

memset(m_ptAVCState, 0, sizeof(AVCState));

m_Video264Statue = CONFIG_STATUS;

return 0;

}

s32 MP4Writer::Write264Sample(u8 *pData, u32 Size, u64 TimeStamp)

{

u8 *pStart = pData;

u8 NaluType;

u32 NaluSize = 0;

s32 ID = 0; /* initialized because the PPS branch below reads ID without parsing the PPS */

GF_ISOSample tISOSample;

/* The H.264 track has not been initialized yet */

if (INIT_STATUS == m_Video264Statue)

return -1;

while (1)

{

pData = FindNalu264(pData + NaluSize, Size - (u32)(pData - pStart) - NaluSize, &NaluType, &NaluSize);

if (NULL == pData)

break;

/* Once configuration is complete, handle only I-frames and P-frames */

if (CONFIG_FINISH == m_Video264Statue)

{

if (!findfirsidr_) {

if (5 != NaluType)

continue;

else

findfirsidr_ = true;

}

if (1 != NaluType && 5 != NaluType) /* P-frame, I-frame */

continue;

if (-1 == m_VideoTimeStampStart)

m_VideoTimeStampStart = TimeStamp;

*((u32 *)pData) = htonl(NaluSize - 4); /* The length must not include the 4-byte start code! */

tISOSample.data = (char *)pData;

tISOSample.dataLength = NaluSize;

tISOSample.IsRAP = (5 == NaluType) ? RAP : RAP_NO;

tISOSample.DTS = TimeStamp - m_VideoTimeStampStart;

tISOSample.CTS_Offset = 0;

tISOSample.nb_pack = 0;

int ret = gf_isom_add_sample(m_ptFile, m_264TrackIndex, m_264StreamIndex, &tISOSample);

if (GF_OK != ret)

{

//*((u32 *)pData) = htonl(1); /* Restore the 0x00000001 start code */

return -1;

}

//*((u32 *)pData) = htonl(1); /* Restore the 0x00000001 start code */

}

/* Until configuration is complete, handle only the SPS and PPS headers (H.264 has no VPS) */

else if (CONFIG_STATUS == m_Video264Statue)

{

pData += 4;

NaluSize -= 4;

if (7 == NaluType && NULL == m_tAVCConfig_SPS264->data) /* SPS */

{

ID = gf_media_avc_read_sps((char *)pData, NaluSize, m_ptAVCState,0,NULL);

m_ptAVCConfig->AVCProfileIndication = m_ptAVCState->sps[ID].profile_idc;

m_ptAVCConfig->profile_compatibility = m_ptAVCState->sps[ID].prof_compat;

m_ptAVCConfig->AVCLevelIndication = m_ptAVCState->sps[ID].level_idc;

m_tAVCConfig_SPS264->id = ID;

m_tAVCConfig_SPS264->size = (u16)NaluSize;

m_tAVCConfig_SPS264->data = (char *)malloc(NaluSize);

if (NULL == m_tAVCConfig_SPS264->data)

continue;

memcpy(m_tAVCConfig_SPS264->data, pData, NaluSize);

gf_isom_set_visual_info(m_ptFile, m_264TrackIndex, m_264StreamIndex, m_ptAVCState->sps[ID].width, m_ptAVCState->sps[ID].height);

}

else if (8 == NaluType && NULL == m_tAVCConfig_PPS264->data) /* PPS */

{

m_tAVCConfig_PPS264->id = ID;

m_tAVCConfig_PPS264->size = (u16)NaluSize;

m_tAVCConfig_PPS264->data = (char *)malloc(NaluSize);

if (NULL == m_tAVCConfig_PPS264->data)

continue;

memcpy(m_tAVCConfig_PPS264->data, pData, NaluSize);

}

else

{

continue;

}

if (m_tAVCConfig_SPS264->data && m_tAVCConfig_PPS264->data)

{

gf_isom_avc_config_update(m_ptFile, m_264TrackIndex, m_264StreamIndex, m_ptAVCConfig);

m_Video264Statue = CONFIG_FINISH;

if (m_ptAVCState) {

free(m_ptAVCState);

m_ptAVCState = NULL;

}

}

}

}

return 0;

}

s32 MP4Writer::InitAAC(u8 AudioType, u32 SampleRate, u8 Channel, u32 TimeScale)

{

GF_ESD *ptStreamDesc;

s8 SampleRateID;

u16 AudioConfig = 0;

u8 AACProfile;

s32 res = 0;

if (NULL == m_ptFile || INIT_STATUS != m_AudioAACStatue)

return -1;

m_AudioTimeStampStart = -1;

/* Create the track */

m_AACTrackIndex = gf_isom_new_track(m_ptFile, 0, GF_ISOM_MEDIA_AUDIO, TimeScale);

if (0 == m_AACTrackIndex)

return -1;

if (GF_OK != gf_isom_set_track_enabled(m_ptFile, m_AACTrackIndex, 1))

return -1;

/* Create and configure the stream */

SampleRateID = GetSampleRateID(SampleRate);

if (0 > SampleRateID)

return -1;

GetAudioSpecificConfig(AudioType, (u8)SampleRateID, Channel, (u8*)(&AudioConfig), ((u8*)(&AudioConfig))+1);

ptStreamDesc = gf_odf_desc_esd_new(SLPredef_MP4);

ptStreamDesc->slConfig->timestampResolution = TimeScale;

ptStreamDesc->decoderConfig->streamType = GF_STREAM_AUDIO;

//ptStreamDesc->decoderConfig->bufferSizeDB = 20; // what is this parameter for?

ptStreamDesc->decoderConfig->objectTypeIndication = 0x40;//ptStreamDesc->decoderConfig->objectTypeIndication = GPAC_OTI_AUDIO_AAC_MPEG4;

ptStreamDesc->decoderConfig->decoderSpecificInfo->dataLength = 2;

ptStreamDesc->decoderConfig->decoderSpecificInfo->data = (char *)&AudioConfig;

ptStreamDesc->ESID = gf_isom_get_track_id(m_ptFile, m_AACTrackIndex);

if (GF_OK != gf_isom_new_mpeg4_description(m_ptFile, m_AACTrackIndex, ptStreamDesc, NULL, NULL, &m_AACStreamIndex))

{

res = -1;

goto ERR;

}

if (gf_isom_set_audio_info(m_ptFile, m_AACTrackIndex, m_AACStreamIndex, SampleRate, Channel, 16, GF_IMPORT_AUDIO_SAMPLE_ENTRY_NOT_SET))

{

res = -1;

goto ERR;

}

AACProfile = GetAACProfile(AudioType, SampleRate, Channel);

gf_isom_set_pl_indication(m_ptFile, GF_ISOM_PL_AUDIO, AACProfile);

m_AudioAACStatue = CONFIG_FINISH;

ERR:

ptStreamDesc->decoderConfig->decoderSpecificInfo->data = NULL;

gf_odf_desc_del((GF_Descriptor *)ptStreamDesc);

return res;

}

s32 MP4Writer::WriteAACSample(u8 *pData, u32 Size, u64 TimeStamp)

{

GF_ISOSample tISOSample;

if (CONFIG_FINISH != m_AudioAACStatue)

return 0;

if (!findfirsidr_) {

return 0;

}

if (-1 == m_AudioTimeStampStart)

m_AudioTimeStampStart = TimeStamp;

tISOSample.IsRAP = RAP;

tISOSample.dataLength = Size;

tISOSample.data = (char *)pData;

tISOSample.DTS = TimeStamp - m_AudioTimeStampStart;

tISOSample.CTS_Offset = 0;

tISOSample.nb_pack = 0;

if (GF_OK != gf_isom_add_sample(m_ptFile, m_AACTrackIndex, m_AACStreamIndex, &tISOSample))

return -1;

return 0;

}

extern "C" {

void* MP4_Init()

{

return (void *)(new MP4Writer());

}

s32 MP4_CreatFile(void *pCMP4Writer, char *strFileName)

{

if (NULL == pCMP4Writer)

return -1;

return ((MP4Writer *)pCMP4Writer)->CreatFile(strFileName);

}

s32 MP4_InitVideo265(void *pCMP4Writer, u32 TimeScale)

{

if (NULL == pCMP4Writer)

return -1;

return ((MP4Writer *)pCMP4Writer)->Init265(TimeScale);

}

s32 MP4_Write265Sample(void *pCMP4Writer, u8 *pData, u32 Size, u64 TimeStamp)

{

if (NULL == pCMP4Writer)

return -1;

return ((MP4Writer *)pCMP4Writer)->Write265Sample(pData, Size, TimeStamp);

}

s32 MP4_InitVideo264(void * pCMP4Writer, u32 TimeScale)

{

if (NULL == pCMP4Writer)

return -1;

return ((MP4Writer *)pCMP4Writer)->Init264(TimeScale);

}

s32 MP4_Write264Sample(void * pCMP4Writer, u8 * pData, u32 Size, u64 TimeStamp)

{

if (NULL == pCMP4Writer)

return -1;

return ((MP4Writer *)pCMP4Writer)->Write264Sample(pData, Size, TimeStamp);

}

s32 MP4_InitAudioAAC(void *pCMP4Writer, u8 AudioType, u32 SampleRate, u8 Channel, u32 TimeScale)

{

if (NULL == pCMP4Writer)

return -1;

return ((MP4Writer *)pCMP4Writer)->InitAAC(AudioType, SampleRate, Channel, TimeScale);

}

s32 MP4_WriteAACSample(void *pCMP4Writer, u8 *pData, u32 Size, u64 TimeStamp)

{

if (NULL == pCMP4Writer)

return -1;

return ((MP4Writer *)pCMP4Writer)->WriteAACSample(pData, Size, TimeStamp);

}

void MP4_CloseFile(void *pCMP4Writer)

{

if (NULL == pCMP4Writer)

return;

((MP4Writer *)pCMP4Writer)->CloseFile();

}

void MP4_Exit(void *pCMP4Writer)

{

if (NULL == pCMP4Writer)

return;

delete static_cast<MP4Writer *>(pCMP4Writer); /* deleting through void* would skip the destructor */

}

}
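Write264Sample and Write265Sample above replace the 4-byte start code of each slice with the big-endian payload length by casting the buffer to `u32 *` and calling `htonl`. The same step can be sketched with plain byte writes, which avoids the Winsock dependency and the possibly unaligned pointer cast. This is only an illustration: `StartCodeToLengthPrefix` is a hypothetical helper name, not something provided by GPAC or by the class above.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper, not part of GPAC or of the MP4Writer class above.
// Overwrites the 4-byte Annex B start code (00 00 00 01) at the front of
// `nalu` with the big-endian byte count of the payload that follows it,
// matching a sample layout that uses 4-byte NAL unit length fields.
inline void StartCodeToLengthPrefix(std::vector<std::uint8_t>& nalu)
{
    assert(nalu.size() > 4); // start code plus at least one payload byte
    const std::uint32_t payloadSize =
        static_cast<std::uint32_t>(nalu.size() - 4);
    nalu[0] = static_cast<std::uint8_t>((payloadSize >> 24) & 0xff);
    nalu[1] = static_cast<std::uint8_t>((payloadSize >> 16) & 0xff);
    nalu[2] = static_cast<std::uint8_t>((payloadSize >> 8) & 0xff);
    nalu[3] = static_cast<std::uint8_t>(payloadSize & 0xff);
}
```

For a 7-byte buffer holding a start code and three payload bytes, the first four bytes become 00 00 00 03 and the payload is left untouched.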

Notes:

1) The AudioType argument of MP4_InitAudioAAC is the AAC profile (the MPEG-4 audio object type); LC is 2.

GF_M4A_AAC_MAIN = 1,

GF_M4A_AAC_LC = 2,

GF_M4A_AAC_SSR = 3,

GF_M4A_AAC_LTP = 4,

GF_M4A_AAC_SBR = 5,

GF_M4A_AAC_SCALABLE = 6,

GF_M4A_TWINVQ = 7,

GF_M4A_CELP = 8,

GF_M4A_HVXC = 9,

GF_M4A_TTSI = 12,

GF_M4A_MAIN_SYNTHETIC = 13,

GF_M4A_WAVETABLE_SYNTHESIS = 14,

GF_M4A_GENERAL_MIDI = 15,

GF_M4A_ALGO_SYNTH_AUDIO_FX = 16,

GF_M4A_ER_AAC_LC = 17,

GF_M4A_ER_AAC_LTP = 19,

GF_M4A_ER_AAC_SCALABLE = 20,

GF_M4A_ER_TWINVQ = 21,

GF_M4A_ER_BSAC = 22,

GF_M4A_ER_AAC_LD = 23,

GF_M4A_ER_CELP = 24,

GF_M4A_ER_HVXC = 25,

GF_M4A_ER_HILN = 26,

GF_M4A_ER_PARAMETRIC = 27,

GF_M4A_SSC = 28,

GF_M4A_AAC_PS = 29,

GF_M4A_LAYER1 = 32,

GF_M4A_LAYER2 = 33,

GF_M4A_LAYER3 = 34,

GF_M4A_DST = 35,

GF_M4A_ALS = 36
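To make the role of the audio type concrete, the following sketch packs the same 2-byte configuration that GetAudioSpecificConfig builds in the listing: 5 bits of object type, 4 bits of sampling-frequency index, 4 bits of channel configuration, and 3 zero bits. The function name `MakeAudioSpecificConfig` is an assumption made for this example only.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Hypothetical helper mirroring GetAudioSpecificConfig from the listing.
// Bit layout (MSB first): 5 bits object type, 4 bits sampling-frequency
// index, 4 bits channel configuration, 3 bits of zero padding.
inline std::array<std::uint8_t, 2> MakeAudioSpecificConfig(
    std::uint8_t objectType, std::uint8_t sampleRateIndex, std::uint8_t channels)
{
    assert(sampleRateIndex < 13); // indices 0..12 as in GetSampleRateID
    const std::uint16_t config = static_cast<std::uint16_t>(
        ((objectType & 0x1f) << 11) |
        ((sampleRateIndex & 0x0f) << 7) |
        ((channels & 0x0f) << 3));
    std::array<std::uint8_t, 2> bytes{};
    bytes[0] = static_cast<std::uint8_t>(config >> 8);   // high byte first
    bytes[1] = static_cast<std::uint8_t>(config & 0xff); // then low byte
    return bytes;
}
```

With AAC LC (type 2), 44100 Hz (index 4), and two channels, this yields the bytes 0x12 0x10.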

2) When feeding AAC data, remember to strip the ADTS header first; the AdtsDemux function above can be used for this.
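As a rough sketch of what stripping the ADTS header involves: the header is 7 bytes when protection_absent is 1 and 9 bytes when a CRC follows, and the 13-bit frame_length field covers header plus payload. The struct and function below (`AdtsInfo`, `ParseAdtsHeader`) are hypothetical names for illustration; unlike AdtsDemux, this version also checks the syncword.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical result type for this sketch.
struct AdtsInfo {
    std::size_t headerSize;  // 7 without CRC, 9 with CRC
    std::size_t frameLength; // header + payload, from the 13-bit field
};

// Parses the fixed part of one ADTS header. Returns false when the buffer
// is too short, the 12-bit syncword is missing, or the lengths disagree.
inline bool ParseAdtsHeader(const std::uint8_t* data, std::size_t size,
                            AdtsInfo& out)
{
    if (data == nullptr || size < 7)
        return false;
    if (data[0] != 0xFF || (data[1] & 0xF0) != 0xF0)
        return false; // syncword 0xFFF not found
    const bool protectionAbsent = (data[1] & 0x01) != 0;
    out.headerSize = protectionAbsent ? 7 : 9;
    out.frameLength = static_cast<std::size_t>(
        ((data[3] & 0x03) << 11) | (data[4] << 3) | (data[5] >> 5));
    return size >= out.headerSize && out.frameLength >= out.headerSize;
}
```

The raw AAC payload to pass to MP4_WriteAACSample would then start at `data + headerSize` and span `frameLength - headerSize` bytes, assuming one ADTS frame per buffer.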

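2. Steps for writing an H.264 stream to an MP4 file

void *handleMp4 = NULL;

handleMp4 = MP4_Init();

MP4_CreatFile(handleMp4, "d:\\test.mp4");

MP4_InitVideo264(handleMp4, 1000);

// Write the H.264 stream; the data must include the 00 00 00 01 start codes.

// h264data is the H.264 data, datasize is its size, and timestamp is the timestamp,

// which must be converted to a timescale of 1000 (1000 and 90000 are the two common timescales).

MP4_Write264Sample(handleMp4, h264data, datasize, timestamp);

...

MP4_CloseFile(handleMp4);

MP4_Exit(handleMp4);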
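The timestamp conversion mentioned in the comments can be sketched as follows, assuming the source timestamps come from a 90000 Hz clock (as is common for RTP or TS video) and the track was created with a timescale of 1000. `RescaleTimestamp` is a hypothetical helper, not a GPAC function.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: converts `ts` from `fromScale` ticks per second to
// `toScale` ticks per second, rounding down. The value is split into whole
// seconds and a remainder so the multiplication cannot overflow 64 bits.
inline std::uint64_t RescaleTimestamp(std::uint64_t ts,
                                      std::uint32_t fromScale,
                                      std::uint32_t toScale)
{
    assert(fromScale != 0);
    const std::uint64_t whole = ts / fromScale;
    const std::uint64_t rem = ts % fromScale;
    return whole * toScale + (rem * toScale) / fromScale;
}
```

A 25 fps frame interval of 3600 ticks at 90000 Hz becomes 40 at a timescale of 1000, which is the value to pass as the timestamp argument in the call above.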

3. Steps for writing an H.265 stream to an MP4 file

void *handleMp4 = NULL;

handleMp4 = MP4_Init();

MP4_CreatFile(handleMp4, "d:\\test.mp4");

MP4_InitVideo265(handleMp4, 1000);

// Write the H.265 stream; the data must include the 00 00 00 01 start codes.

// h265data is the H.265 data, datasize is its size, and timestamp is the timestamp,

// which must be converted to a timescale of 1000.

MP4_Write265Sample(handleMp4, h265data, datasize, timestamp);

...

MP4_CloseFile(handleMp4);

MP4_Exit(handleMp4);
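Both write paths depend on locating the 00 00 00 01 start code and reading the NAL unit type from the byte after it; the two codecs differ only in how that byte is decoded. The sketch below isolates those two steps, using the same bit masks as FindNalu and FindNalu264 in the listing. The function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// H.264: the type is the low 5 bits of the first header byte
// (7 = SPS, 8 = PPS, 5 = IDR slice, 1 = non-IDR slice).
inline std::uint8_t H264NalType(std::uint8_t headerByte)
{
    return headerByte & 0x1f;
}

// H.265: the type is bits 1..6 of the first header byte
// (32 = VPS, 33 = SPS, 34 = PPS, 19 = IDR slice, 1 = trailing slice).
inline std::uint8_t H265NalType(std::uint8_t headerByte)
{
    return (headerByte >> 1) & 0x3f;
}

// Returns the offset of the first 4-byte start code, or -1 if none exists.
inline std::ptrdiff_t FindStartCode(const std::uint8_t* data, std::size_t size)
{
    assert(data != nullptr || size == 0);
    for (std::size_t i = 0; i + 4 <= size; ++i) {
        if (data[i] == 0 && data[i + 1] == 0 &&
            data[i + 2] == 0 && data[i + 3] == 1)
            return static_cast<std::ptrdiff_t>(i);
    }
    return -1;
}
```

Note that this sketch only recognizes 4-byte start codes, like the listing; streams that use the 3-byte form 00 00 01 would need an extra check.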

4. Generating MP4 files from the command line

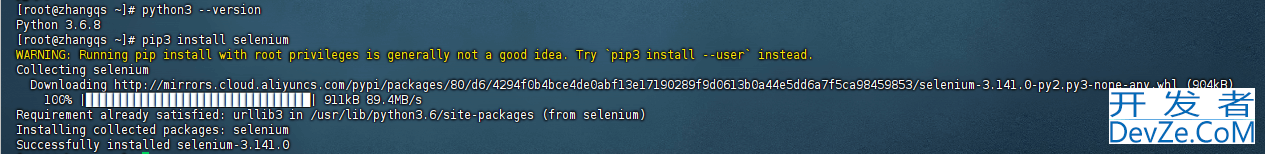

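Besides the GPAC core, the full package also includes a set of GPAC plugins: MPEG-4 BIFS (scene decoder), MPEG-4 ODF (object descriptor decoder), MPEG-4 LASeR (scene decoder), an MPEG-4 SAF demultiplexer, a textual MPEG-4 loader (supporting the uncompressed MPEG-4 BT and XMT, VRML, and X3D text formats), an image package (supporting PNG, JPEG, BMP, and JPEG2000), and more.

1) To convert an H.265 stream to MP4, run one of the following commands.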

./MP4Box -v -add Catus_1920x1080_50_qp32.bin:FMT=HEVC -fps 50 -new output.mp4

./MP4Box -add name_of_annexB_bitstream.(bit,bin,265) -fps 50 -new output.mp4

To play the resulting H.265 video file, run the following command.

./MP4Client output.mp4

2) To convert the H.265 MP4 file to a TS stream, run the following command.

./mp42ts -prog=hevc.mp4 -dst-file=test.ts

To play the resulting H.265 TS stream, run the following command.

./MP4Client test.ts

Remarks:

1. GPAC download links

GitHub - gpac/gpac: Modular Multimedia framework for packaging, streaming and playing your favorite content, see http://netflix.gpac.io, or Downloads | GPAC

2. Build guides

Build Introduction · gpac/gpac Wiki · GitHub

Windows: GPAC Build Guide for Windows · gpac/gpac Wiki · GitHub

Linux: GPAC Build Guide for Linux · gpac/gpac Wiki · GitHub
