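Contents

- Introduction

- How Choreographer is created

- How VSYNC signals are scheduled and dispatched

- Requesting VSYNC signals

- Dispatching VSYNC signals

- Handling VSYNC signals

- Summary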

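Introduction

Rendering an Android app's UI and getting it onto the screen takes several cooperating roles. The App process measures, lays out and draws its views, and the SurfaceFlinger process composites the rendered data. When the App process starts measuring, laying out and drawing, and when SurfaceFlinger starts compositing and presenting, determines whether Android can refresh the screen in an orderly way and give users a smooth experience.

To coordinate the production of view data in the App process with its consumption in the SurfaceFlinger process, Android introduced Choreographer, which schedules the App process's layout and drawing work on VSYNC so that it lines up with SurfaceFlinger's composition work. This reduces problems such as screen tearing that arise when app rendering and screen refresh are out of sync.

This article analyses how the Choreographer mechanism works from two angles: how a Choreographer is created, and how it requests, dispatches and handles VSYNC signals.

Note: the source code in this article corresponds to Android 13.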

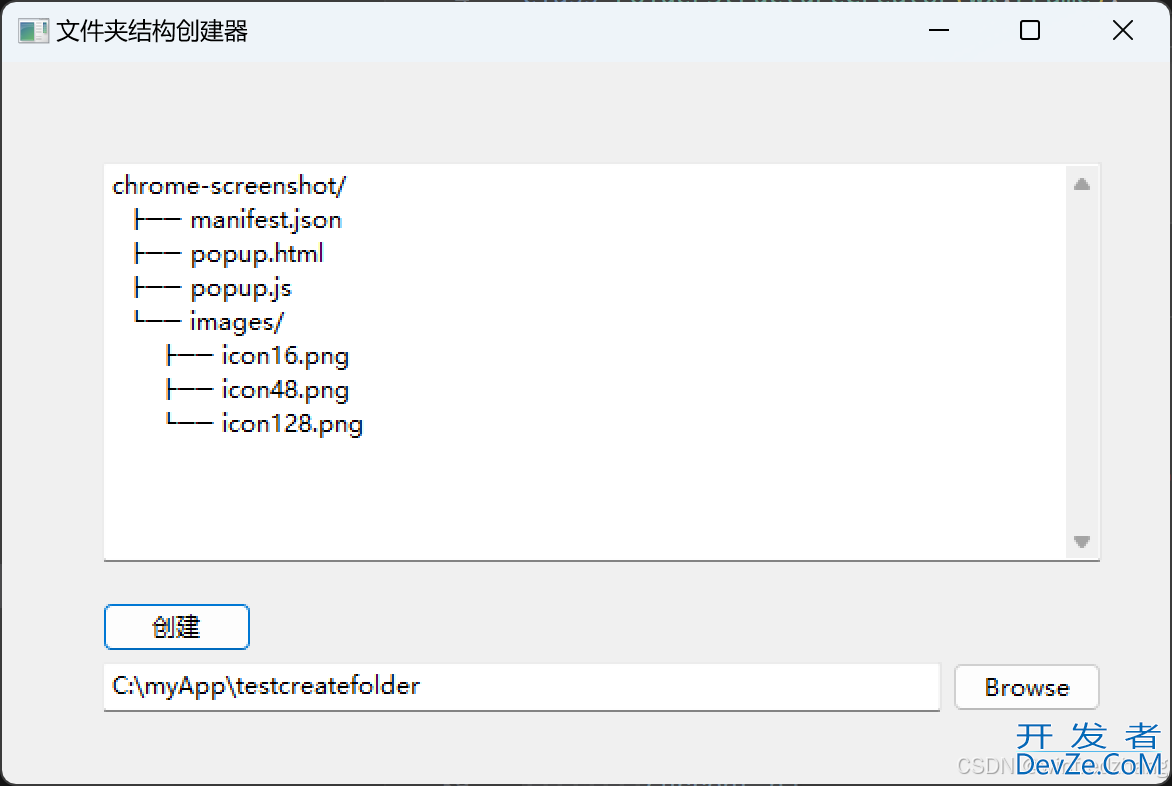

How Choreographer is created

First, the timing. When an Activity is started, the SystemServer process calls into the App process over Binder from ActivityTaskSupervisor#realStartActivityLocked, via IApplicationThread#scheduleTransaction. After Activity#onResume has run, the App process calls ViewManager#addView, which creates a ViewRootImpl instance. The ViewRootImpl constructor obtains the Choreographer instance and keeps it as a member field.

After SystemServer receives the App process's Binder request to start an Activity, ActivityTaskSupervisor#realStartActivityLocked wraps a LaunchActivityItem and a ResumeActivityItem in a ClientTransaction and schedules it through ClientLifecycleManager#scheduleTransaction. In the end the call crosses processes through the App's IApplicationThread (a Binder handle) and lands in ApplicationThread#scheduleTransaction in the App process.

// com.android.server.wm.ActivityTaskSupervisor#realStartActivityLocked

boolean realStartActivityLocked(ActivityRecord r, WindowProcessController proc, boolean andResume, boolean checkConfig) throws RemoteException {

// ...

// Create activity launch transaction.

final ClientTransaction clientTransaction = ClientTransaction.obtain(proc.getThread(), r.token);

// ...

clientTransaction.addCallback(LaunchActivityItem.obtain(new Intent(r.intent), System.identityHashCode(r), r.info, mergedConfiguration.getGlobalConfiguration(), mergedConfiguration.getOverrideConfiguration(), r.compat, r.getFilteredReferrer(r.launchedFromPackage), task.voiceInteractor, proc.getReportedProcState(), r.getSavedState(), r.getPersistentSavedState(), results, newIntents, r.takeOptions(), isTransitionForward, proc.createProfilerInfoIfNeeded(), r.assistToken, activityClientController, r.shareableActivityToken, r.getLaunchedFromBubble(), fragmentToken));

// Set desired final state.

final ActivityLifecycleItem lifecycleItem;

if (andResume) {

lifecycleItem = ResumeActivityItem.obtain(isTransitionForward);

} else {

lifecycleItem = PauseActivityItem.obtain();

}

clientTransaction.setLifecycleStateRequest(lifecycleItem);

// Schedule transaction.

mService.getLifecycleManager().scheduleTransaction(clientTransaction);

// ...

}

// com.android.server.wm.ClientLifecycleManager#scheduleTransaction

void scheduleTransaction(ClientTransaction transaction) throws RemoteException {

final IApplicationThread client = transaction.getClient();

transaction.schedule();

if (!(client instanceof Binder)) {

// If client is not an instance of Binder - it's a remote call and at this point it is

// safe to recycle the object. All objects used for local calls will be recycled after

// the transaction is executed on client in ActivityThread.

transaction.recycle();

}

}

// android.app.servertransaction.ClientTransaction#schedule

/**

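* Once the transaction has been initialized and scheduled, it is sent to the client and processed in the following order:

* 1. Call preExecute(ClientTransactionHandler)

* 2. Schedule the message that corresponds to the transaction

* 3. Call TransactionExecutor#execute(ClientTransaction)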

*/

public void schedule() throws RemoteException {

mClient.scheduleTransaction(this); // mClient is the IApplicationThread Binder handle that the App process passed over Binder.

}

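At this point the call chain has reached the App process, where it is handled by ActivityThread's member field of type ApplicationThread. ApplicationThread#scheduleTransaction calls the outer class's scheduleTransaction directly, so it simply forwards to ActivityThread#scheduleTransaction (inherited from ClientTransactionHandler). That method uses the main-thread Handler mH to post an EXECUTE_TRANSACTION message to the main thread's MessageQueue, and the App process then handles the message on the main thread.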

/**

* Manages the main-thread tasks of an application process, scheduling and executing them as the SystemServer process requests.

*/

public final class ActivityThread extends ClientTransactionHandler

implements ActivityThreadInternal {

@UnsupportedAppUsage

final ApplicationThread mAppThread = new ApplicationThread();

@UnsupportedAppUsage

final Looper mLooper = Looper.myLooper();

@UnsupportedAppUsage

final H mH = new H();

// An executor that performs multi-step transactions.

private final TransactionExecutor mTransactionExecutor = new TransactionExecutor(this);

// ...

private class ApplicationThread extends IApplicationThread.Stub {

// ...

@Override

public void scheduleTransaction(ClientTransaction transaction) throws RemoteException {

ActivityThread.this.scheduleTransaction(transaction);

}

// ...

}

// from the class ClientTransactionHandler that is the base class of ActivityThread

/** Prepare and schedule transaction for execution. */

void scheduleTransaction(ClientTransaction transaction) {

transaction.preExecute(this);

sendMessage(ActivityThread.H.EXECUTE_TRANSACTION, transaction);

}

private void sendMessage(int what, Object obj, int arg1, int arg2, boolean async) {

if (DEBUG_MESSAGES) {

Slog.v(TAG,

"SCHEDULE " + what + " " + mH.codeToString(what) + ": " + arg1 + " / " + obj);

}

Message msg = Message.obtain();

msg.what = what;

msg.obj = obj;

msg.arg1 = arg1;

msg.arg2 = arg2;

if (async) {

msg.setAsynchronous(true);

}

// The main thread's Handler

mH.sendMessage(msg);

}

class H extends Handler {

public void handleMessage(Message msg) {

// ...

switch (msg.what) {

// ...

case EXECUTE_TRANSACTION:

final ClientTransaction transaction = (ClientTransaction) msg.obj;

mTransactionExecutor.execute(transaction);

if (isSystem()) {

// Client transactions inside system process are recycled on the client side

// instead of ClientLifecycleManager to avoid being cleared before this

// message is handled.

transaction.recycle();

}

// TODO(lifecycler): Recycle locally scheduled transactions.

break;

// ...

}

// ...

}

}
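The handoff described above, where a Binder thread enqueues a message and the main thread executes it later, can be modelled in plain Java. This is only a sketch: MiniLooper, its Message class and the constant values are hypothetical stand-ins, and a BlockingQueue replaces the real MessageQueue.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical stand-in for Looper + Handler: one thread drains a queue of messages.
final class MiniLooper {
    static final int EXECUTE_TRANSACTION = 1; // illustrative value, not the real constant
    static final int QUIT = -1;

    static final class Message {
        final int what;
        final Object obj;
        Message(int what, Object obj) { this.what = what; this.obj = obj; }
    }

    private final BlockingQueue<Message> queue = new LinkedBlockingQueue<>();
    final List<String> handled = new ArrayList<>(); // written only by the looper thread

    // Called from any thread (for example a Binder thread): enqueue and return at once.
    void sendMessage(int what, Object obj) {
        queue.add(new Message(what, obj));
    }

    // Runs on the "main" thread: handle messages one by one until QUIT arrives.
    void loop() {
        try {
            while (true) {
                Message msg = queue.take();
                if (msg.what == QUIT) return;
                if (msg.what == EXECUTE_TRANSACTION) {
                    handled.add(Thread.currentThread().getName() + ":" + msg.obj);
                }
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Demo: a "binder" thread posts a transaction and the "main" thread executes it.
    static List<String> demo() {
        MiniLooper looper = new MiniLooper();
        Thread main = new Thread(looper::loop, "main");
        Thread binder = new Thread(() -> {
            looper.sendMessage(EXECUTE_TRANSACTION, "transaction");
            looper.sendMessage(QUIT, null);
        }, "binder");
        main.start();
        binder.start();
        try {
            binder.join();
            main.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return looper.handled;
    }
}
```

The recorded entry carries the name of the thread that handled the message, which shows that the work runs on the looper thread rather than on the thread that posted it.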

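The main thread handles the message by calling TransactionExecutor#execute, which runs the LaunchActivityItem callback in executeCallbacks and then the ResumeActivityItem final-state request in executeLifecycleState.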

/**

* Class that manages transaction execution in the correct order.

*/

public class TransactionExecutor {

// ...

/**

* Resolve transaction.

* First all callbacks will be executed in the order they appear in the list. If a callback

* requires a certain pre- or post-execution state, the client will be transitioned accordingly.

* Then the client will cycle to the final lifecycle state if provided. Otherwise, it will

* either remain in the initial state, or last state needed by a callback.

*/

public void execute(ClientTransaction transaction) {

// ...

// Where the lifecycle work is actually executed

executeCallbacks(transaction);

executeLifecycleState(transaction);

mPendingActions.clear();

// ...

}

/** Cycle through all states requested by callbacks and execute them at proper times. */

@VisibleForTesting

public void executeCallbacks(ClientTransaction transaction) {

// Fetch the callbacks that SystemServer added earlier (here, LaunchActivityItem)

final List<ClientTransactionItem> callbacks = transaction.getCallbacks();

if (callbacks == null || callbacks.isEmpty()) {

// No callbacks to execute, return early.

return;

}

if (DEBUG_RESOLVER) Slog.d(TAG, tId(transaction) + "Resolving callbacks in transaction");

final IBinder token = transaction.getActivityToken();

ActivityClientRecord r = mTransactionHandler.getActivityClient(token);

// In case when post-execution state of the last callback matches the final state requested

// for the activity in this transaction, we won't do the last transition here and do it when

// moving to final state instead (because it may contain additional parameters from server).

final ActivityLifecycleItem finalStateRequest = transaction.getLifecycleStateRequest();

final int finalState = finalStateRequest != null ? finalStateRequest.getTargetState()

: UNDEFINED;

// Index of the last callback that requests some post-execution state.

final int lastCallbackRequestingState = lastCallbackRequestingState(transaction);

final int size = callbacks.size();

for (int i = 0; i < size; ++i) {

final ClientTransactionItem item = callbacks.get(i);

if (DEBUG_RESOLVER) Slog.d(TAG, tId(transaction) + "Resolving callback: " + item);

final int postExecutionState = item.getPostExecutionState();

final int closestPreExecutionState = mHelper.getClosestPreExecutionState(r,

item.getPostExecutionState());

if (closestPreExecutionState != UNDEFINED) {

cycleToPath(r, closestPreExecutionState, transaction);

}

// Run the callback's execute method (here, LaunchActivityItem); ResumeActivityItem runs later in executeLifecycleState

item.execute(mTransactionHandler, token, mPendingActions);

item.postExecute(mTransactionHandler, token, mPendingActions);

if (r == null) {

// Launch activity request will create an activity record.

r = mTransactionHandler.getActivityClient(token);

}

if (postExecutionState != UNDEFINED && r != null) {

// Skip the very last transition and perform it by explicit state request instead.

final boolean shouldExcludeLastTransition =

i == lastCallbackRequestingState && finalState == postExecutionState;

cycleToPath(r, postExecutionState, shouldExcludeLastTransition, transaction);

}

}

}

// ...

}
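The ordering in TransactionExecutor#execute, callbacks first and the final lifecycle state last, can be illustrated with a small model. MiniTransaction and its fields are hypothetical names; the item names mirror what realStartActivityLocked puts into the transaction (LaunchActivityItem via addCallback, ResumeActivityItem via setLifecycleStateRequest). The intermediate state cycling done by cycleToPath is deliberately left out.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of a client transaction: ordered callbacks plus one final state request.
final class MiniTransaction {
    final List<String> callbacks = new ArrayList<>();
    String lifecycleStateRequest; // may be null when no final state is requested

    // Same order as TransactionExecutor#execute: all callbacks, then the final state.
    static List<String> execute(MiniTransaction t) {
        List<String> log = new ArrayList<>();
        for (String callback : t.callbacks) {
            log.add("callback:" + callback);
        }
        if (t.lifecycleStateRequest != null) {
            log.add("lifecycle:" + t.lifecycleStateRequest);
        }
        return log;
    }

    // Builds the launch transaction from the article and returns the execution order.
    static List<String> demo() {
        MiniTransaction t = new MiniTransaction();
        t.callbacks.add("LaunchActivityItem");
        t.lifecycleStateRequest = "ResumeActivityItem";
        return execute(t);
    }
}
```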

This eventually reaches ActivityThread#handleResumeActivity, which performs the resume and then calls addView to associate the DecorView with WindowManagerImpl.

/**

* Request to move an activity to resumed state.

* @hide

*/

public class ResumeActivityItem extends ActivityLifecycleItem {

private static final String TAG = "ResumeActivityItem";

// ...

@Override

public void execute(ClientTransactionHandler client, ActivityClientRecord r,

PendingTransactionActions pendingActions) {

Trace.traceBegin(TRACE_TAG_ACTIVITY_MANAGER, "activityResume");

// client is android.app.ActivityThread

client.handleResumeActivity(r, true /* finalStateRequest */, mIsForward,

"RESUME_ACTIVITY");

Trace.traceEnd(TRACE_TAG_ACTIVITY_MANAGER);

}

// ...

}

// android.app.ActivityThread#handleResumeActivity

@Override

public void handleResumeActivity(ActivityClientRecord r, boolean finalStateRequest,

boolean isForward, String reason) {

// If we are getting ready to gc after going to the background, well

// we are back active so skip it.

unscheduleGcIdler();

mSomeActivitiesChanged = true;

// Run the Activity's resume logic (Activity#onResume)

if (!performResumeActivity(r, finalStateRequest, reason)) {

return;

}

// ...

if (r.window == null && !a.mFinished && willBeVisible) {

r.window = r.activity.getWindow();

View decor = r.window.getDecorView();

decor.setVisibility(View.INVISIBLE);

ViewManager wm = a.getWindowManager();

WindowManager.LayoutParams l = r.window.getAttributes();

a.mDecor = decor;

l.type = WindowManager.LayoutParams.TYPE_BASE_APPLICATION;

l.softInputMode |= forwardBit;

if (r.mPreserveWindow) {

a.mWindowAdded = true;

r.mPreserveWindow = false;

// Normally the ViewRoot sets up callbacks with the Activity

// in addView->ViewRootImpl#setView. If we are instead reusing

// the decor view we have to notify the view root that the

// callbacks may have changed.

ViewRootImpl impl = decor.getViewRootImpl();

if (impl != null) {

impl.notifyChildRebuilt();

}

}

if (a.mVisibleFromClient) {

if (!a.mWindowAdded) {

a.mWindowAdded = true;

// Associate the DecorView with WindowManagerImpl

wm.addView(decor, l);

} else {

// The activity will get a callback for this {@link LayoutParams} change

// earlier. However, at that time the decor will not be set (this is set

// in this method), so no action will be taken. This call ensures the

// callback occurs with the decor set.

a.onWindowAttributesChanged(l);

}

}

// If the window has already been added, but during resume

// we started another activity, then don't yet make the

// window visible.

} else if (!willBeVisible) {

if (localLOGV) Slog.v(TAG, "Launch " + r + " mStartedActivity set");

r.hideForNow = true;

}

// ...

Looper.myQueue().addIdleHandler(new Idler());

}

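WindowManagerImpl#addView delegates to WindowManagerGlobal#addView, which creates a ViewRootImpl instance and records both it and the DecorView. It then calls setView to associate the DecorView with the ViewRootImpl, which from then on acts as the bridge for interacting with the DecorView.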

/**

* Provides low-level communication with the system window manager for

* operations that are not associated with any particular context.

*

* This class is only used internally to implement global functions where

* the caller already knows the display and relevant compatibility information

* for the operation. For most purposes, you should use {@link WindowManager} instead

* since it is bound to a context.

*

* @see WindowManagerImpl

* @hide

*/

public final class WindowManagerGlobal {

@UnsupportedAppUsage

private final ArrayList<View> mViews = new ArrayList<View>();

@UnsupportedAppUsage

private final ArrayList<ViewRootImpl> mRoots = new ArrayList<ViewRootImpl>();

@UnsupportedAppUsage

private final ArrayList<WindowManager.LayoutParams> mParams = new ArrayList<WindowManager.LayoutParams>();

public void addView(View view, ViewGroup.LayoutParams params,

Display display, Window parentWindow, int userId) {

// ...

final WindowManager.LayoutParams wparams = (WindowManager.LayoutParams) params;

ViewRootImpl root;

View panelParentView = null;

synchronized (mLock) {

// ...

IWindowSession windowlessSession = null;

// ...

// Create the ViewRootImpl instance

if (windowlessSession == null) {

root = new ViewRootImpl(view.getContext(), display);

} else {

root = new ViewRootImpl(view.getContext(), display, windowlessSession);

}

view.setLayoutParams(wparams);

// Keep track of the DecorView, the ViewRootImpl and the LayoutParams

mViews.add(view);

mRoots.add(root);

mParams.add(wparams);

// do this last because it fires off messages to start doing things

try {

// setView associates the DecorView with the ViewRootImpl; later interaction with the DecorView goes through the ViewRootImpl

root.setView(view, wparams, panelParentView, userId);

} catch (RuntimeException e) {

// BadTokenException or InvalidDisplayException, clean up.

if (index >= 0) {

removeViewLocked(index, true);

}

throw e;

}

}

}

// ...

}

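Finally we arrive at the ViewRootImpl constructor, which obtains the Choreographer instance and keeps it as a member field. ViewRootImpl thus serves as the bridge for two-way communication between the app layer and the Android system. Next, we look at how Choreographer requests and dispatches VSYNC signals so that the app's UI keeps being refreshed onto the screen.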

public final class ViewRootImpl implements ViewParent,

View.AttachInfo.Callbacks, ThreadedRenderer.DrawCallbacks,

AttachedSurfaceControl {

private static final String TAG = "ViewRootImpl";

public ViewRootImpl(@UiContext Context context, Display display, IWindowSession session,

boolean useSfChoreographer) {

// ...

// Obtain the Choreographer instance

mChoreographer = useSfChoreographer ? Choreographer.getSfInstance() : Choreographer.getInstance();

// ...

}

// ...

}

/**

* Coordinates the timing of animations, input and drawing.

* <p>

* The choreographer receives timing pulses (such as vertical synchronization)

* from the display subsystem then schedules work to occur as part of rendering

* the next display frame.

* </p><p>

* Applications typically interact with the choreographer indirectly using

* higher level abstractions in the animation framework or the view hierarchy.

* Here are some examples of things you can do using the higher-level APIs.

* </p>

* <ul>

* <li>To post an animation to be processed on a regular time basis synchronized with

* display frame rendering, use {@link android.animation.ValueAnimator#start}.</li>

* <li>To post a {@link Runnable} to be invoked once at the beginning of the next display

* frame, use {@link View#postOnAnimation}.</li>

* <li>To post a {@link Runnable} to be invoked once at the beginning of the next display

* frame after a delay, use {@link View#postOnAnimationDelayed}.</li>

* <li>To post a call to {@link View#invalidate()} to occur once at the beginning of the

* next display frame, use {@link View#postInvalidateOnAnimation()} or

* {@link View#postInvalidateOnAnimation(int, int, int, int)}.</li>

* <li>To ensure that the contents of a {@link View} scroll smoothly and are drawn in

* sync with display frame rendering, do nothing. This already happens automatically.

* {@link View#onDraw} will be called at the appropriate time.</li>

* </ul>

* <p>

* However, there are a few cases where you might want to use the functions of the

* choreographer directly in your application. Here are some examples.

* </p>

* <ul>

* <li>If your application does its rendering in a different thread, possibly using GL,

* or does not use the animation framework or view hierarchy at all

* and you want to ensure that it is appropriately synchronized with the display, then use

* {@link Choreographer#postFrameCallback}.</li>

* <li>... and that's about it.</li>

* </ul>

* <p>

* Each {@link Looper} thread has its own choreographer. Other threads can

* post callbacks to run on the choreographer but they will run on the {@link Looper}

* to which the choreographer belongs.

* </p>

*/

public final class Choreographer {

private static final String TAG = "Choreographer";

// Thread local storage for the choreographer.

private static final ThreadLocal<Choreographer> sThreadInstance =

new ThreadLocal<Choreographer>() {

@Override

protected Choreographer initialValue() {

Looper looper = Looper.myLooper();

if (looper == null) {

throw new IllegalStateException("The current thread must have a looper!");

}

Choreographer choreographer = new Choreographer(looper, VSYNC_SOURCE_APP);

if (looper == Looper.getMainLooper()) {

mMainInstance = choreographer;

}

return choreographer;

}

};

private Choreographer(Looper looper, int vsyncSource) {

mLooper = looper;

mHandler = new FrameHandler(looper);

mDisplayEventReceiver = USE_VSYNC

? new FrameDisplayEventReceiver(looper, vsyncSource)

: null;

mLastFrameTimeNanos = Long.MIN_VALUE;

mFrameIntervalNanos = (long)(1000000000 / getRefreshRate());

mCallbackQueues = new CallbackQueue[CALLBACK_LAST + 1];

for (int i = 0; i <= CALLBACK_LAST; i++) {

mCallbackQueues[i] = new CallbackQueue();

}

// b/68769804: For low FPS experiments.

setFPSDivisor(SystemProperties.getInt(ThreadedRenderer.DEBUG_FPS_DIVISOR, 1));

}

/**

* Gets the choreographer for the calling thread. Must be called from

* a thread that already has a {@link android.os.Looper} associated with it.

*

* @return The choreographer for this thread.

* @throws IllegalStateException if the thread does not have a looper.

*/

public static Choreographer getInstance() {

return sThreadInstance.get();

}

}

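The analysis above shows that, on this launch path, the Choreographer is created after Activity#onResume has run. The design makes sense because an Activity is the container that hosts UI in an Android app: only once the container exists is a Choreographer needed to schedule VSYNC signals and start rendering and refreshing the UI frame by frame.

Is a new Choreographer created for every Activity that is started? No. As the code above shows, the instance is stored in a ThreadLocal, so Choreographer is a per-thread singleton and the main thread only ever creates one.

Can any thread create a Choreographer? Only a thread that has a Looper can, because Choreographer relies on the Looper to switch threads. Why the thread switch is needed is answered in the analysis below.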
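The per-thread singleton behaviour comes from ThreadLocal and can be reproduced in a few lines of plain Java. PerThreadScheduler is a hypothetical stand-in for Choreographer; the Looper check performed by the real initialValue() is not modelled here.

```java
// Hypothetical stand-in for Choreographer: one instance per thread, created lazily.
final class PerThreadScheduler {
    private static final ThreadLocal<PerThreadScheduler> sThreadInstance =
            ThreadLocal.withInitial(PerThreadScheduler::new);

    // Name of the thread that triggered the creation of this instance.
    final String ownerThread = Thread.currentThread().getName();

    private PerThreadScheduler() {}

    // Returns the calling thread's instance, creating it on first use.
    static PerThreadScheduler getInstance() {
        return sThreadInstance.get();
    }

    // Helper for the demo: returns the instance seen by a freshly started thread.
    static PerThreadScheduler instanceOnNewThread(String name) {
        PerThreadScheduler[] holder = new PerThreadScheduler[1];
        Thread t = new Thread(() -> holder[0] = getInstance(), name);
        t.start();
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return holder[0];
    }
}
```

Repeated getInstance() calls on one thread return the same object, while a second thread gets its own instance. This is why starting more Activities on the main thread does not create more Choreographers.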

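How VSYNC signals are scheduled and dispatched

With the source code at hand, let us look at how Choreographer requests VSYNC signals, how it receives them afterwards, and how it handles them once received.

First, what does the Choreographer constructor set up to make this possible? It creates a FrameHandler on the given Looper (the main thread's, in the case discussed here) for thread switching, which ensures that VSYNC signals are both requested and handled on that thread. It then creates a FrameDisplayEventReceiver to request and receive VSYNC signals. Finally, it creates an array of CallbackQueue objects to hold the various types of tasks posted by upper layers.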

private Choreographer(Looper looper, int vsyncSource) {

mLooper = looper;

// Handles thread switching

mHandler = new FrameHandler(looper);

// Requests and receives VSYNC signals

mDisplayEventReceiver = USE_VSYNC

? new FrameDisplayEventReceiver(looper, vsyncSource)

: null;

mLastFrameTimeNanos = Long.MIN_VALUE;

mFrameIntervalNanos = (long)(1000000000 / getRefreshRate());

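// Holds the tasks posted by callers, one queue per callback type (input, animation, insets animation, traversal, commit)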

mCallbackQueues = new CallbackQueue[CALLBACK_LAST + 1];

for (int i = 0; i <= CALLBACK_LAST; i++) {

mCallbackQueues[i] = new CallbackQueue();

}

// b/68769804: For low FPS experiments.

setFPSDivisor(SystemProperties.getInt(ThreadedRenderer.DEBUG_FPS_DIVISOR, 1));

}

private final class CallbackQueue {

private CallbackRecord mHead;

// ...

}

// Node of a singly linked list

private static final class CallbackRecord {

public CallbackRecord next;

public long dueTime;

/** Runnable or FrameCallback or VsyncCallback object. */

public Object action;

/** Denotes the action type. */

public Object token;

// ...

}
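To make the linked-list structure concrete, here is a simplified sketch of a queue that keeps its records sorted by dueTime and hands out everything that is due. MiniCallbackQueue and its methods are hypothetical simplifications: the real CallbackQueue also tracks tokens, recycles records and runs under a lock.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical simplification of a callback queue: a singly linked list sorted by dueTime.
final class MiniCallbackQueue {
    private static final class Record {
        Record next;
        long dueTime;
        Runnable action;
    }

    private Record head;

    // Insert so that earlier due times come first; equal due times keep insertion order.
    void addCallback(long dueTime, Runnable action) {
        Record r = new Record();
        r.dueTime = dueTime;
        r.action = action;
        if (head == null || dueTime < head.dueTime) {
            r.next = head;
            head = r;
            return;
        }
        Record cur = head;
        while (cur.next != null && cur.next.dueTime <= dueTime) {
            cur = cur.next;
        }
        r.next = cur.next;
        cur.next = r;
    }

    // Detach and return every action whose dueTime is <= now, in due-time order.
    List<Runnable> extractDue(long now) {
        List<Runnable> due = new ArrayList<>();
        while (head != null && head.dueTime <= now) {
            due.add(head.action);
            head = head.next;
        }
        return due;
    }

    // Demo: three callbacks added out of order, then only those due at time 20 are run.
    static String demo() {
        MiniCallbackQueue q = new MiniCallbackQueue();
        StringBuilder out = new StringBuilder();
        q.addCallback(30, () -> out.append("c"));
        q.addCallback(10, () -> out.append("a"));
        q.addCallback(20, () -> out.append("b"));
        for (Runnable r : q.extractDue(20)) {
            r.run();
        }
        return out.toString();
    }
}
```

Keeping the list sorted on insertion means that extracting the due callbacks for a frame only has to walk the front of the list.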

Now to how FrameDisplayEventReceiver is created. It extends DisplayEventReceiver and its constructor simply calls the DisplayEventReceiver constructor, so we follow that constructor next.

private final class FrameDisplayEventReceiver extends DisplayEventReceiver implements Runnable {

private boolean mHavePendingVsync;

private long mTimestampNanos;

private int mFrame;

private VsyncEventData mLastVsyncEventData = new VsyncEventData();

// Calls the DisplayEventReceiver constructor directly

public FrameDisplayEventReceiver(Looper looper, int vsyncSource) {

super(looper, vsyncSource, 0);

}

@Override

public void onVsync(long timestampNanos, long physicalDisplayId, int frame, VsyncEventData vsyncEventData) {

try {

long now = System.nanoTime();

if (timestampNanos > now) {

timestampNanos = now;

}

if (mHavePendingVsync) {

Log.w(TAG, "Already have a pending vsync event. There should only be "

+ "one at a time.");

} else {

mHavePendingVsync = true;

}

mTimestampNanos = timestampNanos;

mFrame = frame;

mLastVsyncEventData = vsyncEventData;

// Post an asynchronous message to the main thread

Message msg = Message.obtain(mHandler, this);

msg.setAsynchronous(true);

mHandler.sendMessageAtTime(msg, timestampNanos / TimeUtils.NANOS_PER_MS);

} finally {

Trace.traceEnd(Trace.TRACE_TAG_VIEW);

}

}

// Runs on the main thread

@Override

public void run() {

mHavePendingVsync = false;

doFrame(mTimestampNanos, mFrame, mLastVsyncEventData);

}

}

The DisplayEventReceiver constructor takes the MessageQueue from the Looper (the main thread's here) and calls nativeInit, passing the MessageQueue along with a weak reference to itself.

/**

* Provides a low-level mechanism for an application to receive display events

* such as vertical sync.

*

* The display event receive is NOT thread safe. Moreover, its methods must only

* be called on the Looper thread to which it is attached.

*

* @hide

*/

public abstract class DisplayEventReceiver {

/**

* Creates a display event receiver.

*

* @param looper The looper to use when invoking callbacks.

* @param vsyncSource The source of the vsync tick. Must be on of the VSYNC_SOURCE_* values.

* @param eventRegistration Which events to dispatch. Must be a bitfield consist of the

* EVENT_REGISTRATION_*_FLAG values.

*/

public DisplayEventReceiver(Looper looper, int vsyncSource, int eventRegistration) {

if (looper == null) {

throw new IllegalArgumentException("looper must not be null");

}

mMessageQueue = looper.getQueue();

mReceiverPtr = nativeInit(new WeakReference<DisplayEventReceiver>(this), mMessageQueue,

vsyncSource, eventRegistration);

}

private static native long nativeInit(WeakReference<DisplayEventReceiver> receiver,

MessageQueue messageQueue, int vsyncSource, int eventRegistration);

}

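nativeInit is a native method implemented in C++ in android_view_DisplayEventReceiver.cpp. The key steps are creating the NativeDisplayEventReceiver and calling its initialize method. (The native snippets below carry extra parameters such as vsyncEventDataWeak and layerHandle, which suggests they were taken from a newer AOSP branch than the Java code above; the overall flow is the same.)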

// frameworks/base/core/jni/android_view_DisplayEventReceiver.cpp

static jlong nativeInit(JNIEnv* env, jclass clazz, jobject receiverWeak, jobject vsyncEventDataWeak, jobject messageQueueObj, jint vsyncSource, jint eventRegistration, jlong layerHandle) {

// Get the native MessageQueue object

sp<MessageQueue> messageQueue = android_os_MessageQueue_getMessageQueue(env, messageQueueObj);

if (messageQueue == NULL) {

jniThrowRuntimeException(env, "MessageQueue is not initialized.");

return 0;

}

// Create the native-side receiver, NativeDisplayEventReceiver

sp<NativeDisplayEventReceiver> receiver = new NativeDisplayEventReceiver(env, receiverWeak, vsyncEventDataWeak, messageQueue, vsyncSource, eventRegistration, layerHandle);

// Call initialize to set it up

status_t status = receiver->initialize();

if (status) {

String8 message;

message.appendFormat("Failed to initialize display event receiver. status=%d", status);

jniThrowRuntimeException(env, message.c_str());

return 0;

}

receiver->incStrong(gDisplayEventReceiverClassInfo.clazz); // retain a reference for the object

return reinterpret_cast<jlong>(receiver.get());

}

// The parent class is DisplayEventDispatcher

class NativeDisplayEventReceiver : public DisplayEventDispatcher {

public:

NativeDisplayEventReceiver(JNIEnv* env, jobject receiverWeak, jobject vsyncEventDataWeak, const sp<MessageQueue>& messageQueue, jint vsyncSource, jint eventRegistration, jlong layerHandle);

void dispose();

protected:

virtual ~NativeDisplayEventReceiver();

private:

jobject mReceiverWeakGlobal;

jobject mVsyncEventDataWeakGlobal;

sp<MessageQueue> mMessageQueue;

void dispatchVsync(nsecs_t timestamp, PhysicalDisplayId displayId, uint32_t count, VsyncEventData vsyncEventData) override;

void dispatchHotplug(nsecs_t timestamp, PhysicalDisplayId displayId, bool connected) override;

void dispatchHotplugConnectionError(nsecs_t timestamp, int errorCode) override;

void dispatchModeChanged(nsecs_t timestamp, PhysicalDisplayId displayId, int32_t modeId,

nsecs_t renderPeriod) override;

void dispatchFrameRateOverrides(nsecs_t timestamp, PhysicalDisplayId displayId,

std::vector<FrameRateOverride> overrides) override;

void dispatchNullEvent(nsecs_t timestamp, PhysicalDisplayId displayId) override {}

void dispatchHdcpLevelsChanged(PhysicalDisplayId displayId, int connectedLevel,

int maxLevel) override;

};

NativeDisplayEventReceiver::NativeDisplayEventReceiver(JNIEnv* env, jobject receiverWeak,

jobject vsyncEventDataWeak,

const sp<MessageQueue>& messageQueue,

jint vsyncSource, jint eventRegistration,

jlong layerHandle)

// Parent class constructor

: DisplayEventDispatcher(

messageQueue->getLooper(),

static_cast<gui::ISurfaceComposer::VsyncSource>(vsyncSource),

static_cast<gui::ISurfaceComposer::EventRegistration>(eventRegistration),

layerHandle != 0 ? sp<IBinder>::fromExisting(reinterpret_cast<IBinder*>(layerHandle)) : nullptr

),

// The Java-side receiver

mReceiverWeakGlobal(env->NewGlobalRef(receiverWeak)),

mVsyncEventDataWeakGlobal(env->NewGlobalRef(vsyncEventDataWeak)),

mMessageQueue(messageQueue) {

ALOGV("receiver %p ~ Initializing display event receiver.", this);

}

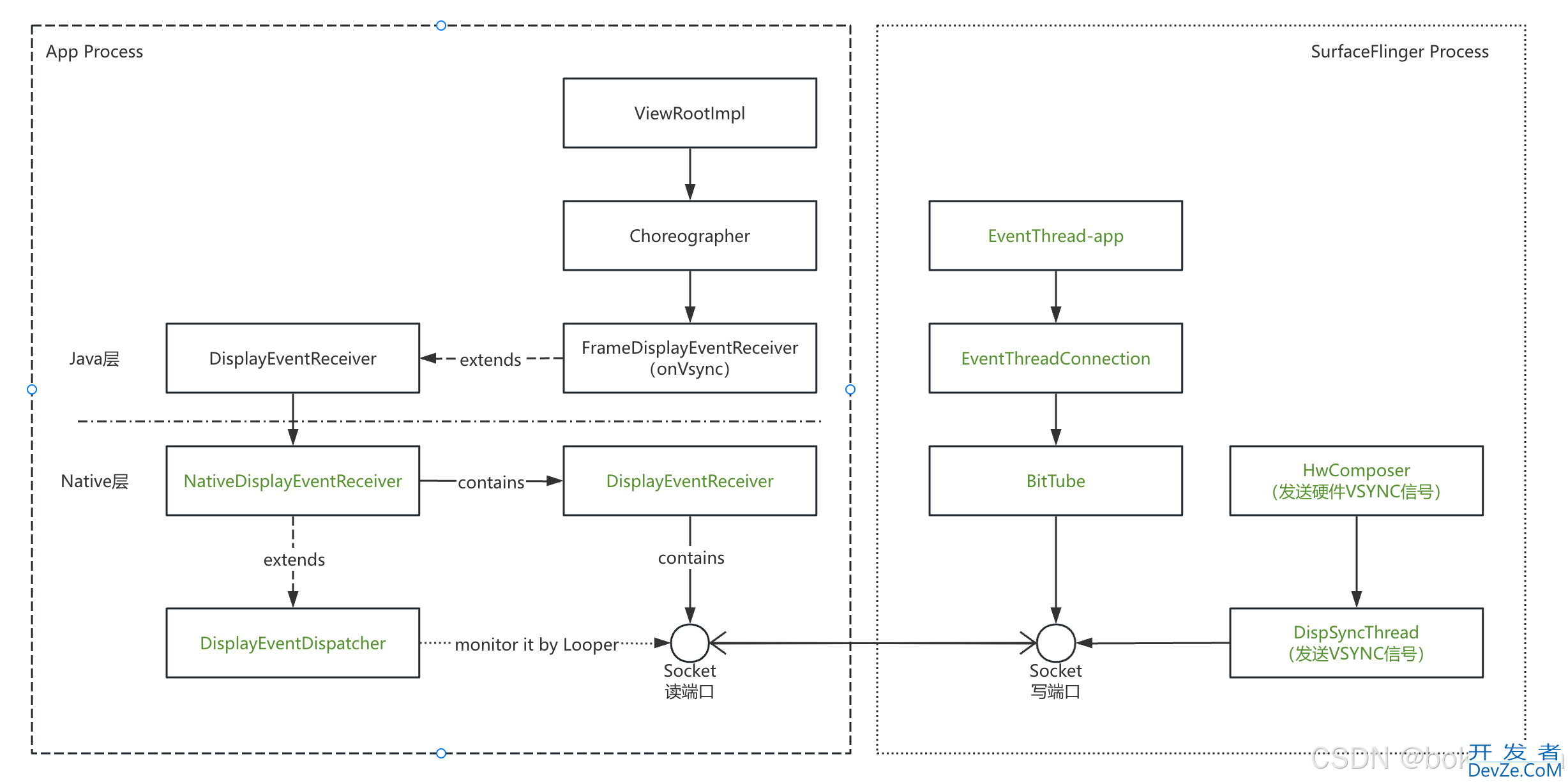

First, the creation of the NativeDisplayEventReceiver object. Its parent class is DisplayEventDispatcher, which, judging from the source, dispatches VSYNC, hotplug and other display events. Internally, DisplayEventDispatcher creates a DisplayEventReceiver object that receives the events sent by the SurfaceFlinger process.

// frameworks/native/libs/gui/DisplayEventDispatcher.cpp

DisplayEventDispatcher::DisplayEventDispatcher(const sp<Looper>& looper,

gui::ISurfaceComposer::VsyncSource vsyncSource,

EventRegistrationFlags eventRegistration,

const sp<IBinder>& layerHandle)

: mLooper(looper),

mReceiver(vsyncSource, eventRegistration, layerHandle), // DisplayEventReceiver

mWaitingForVsync(false),

mLastVsyncCount(0),

mLastScheduleVsyncTime(0) {

ALOGV("dispatcher %p ~ Initializing display event dispatcher.", this);

}

// frameworks/native/libs/gui/DisplayEventReceiver.cpp

DisplayEventReceiver::DisplayEventReceiver(gui::ISurfaceComposer::VsyncSource vsyncSource, EventRegistrationFlags eventRegistration, const sp<IBinder>& layerHandle) {

// Get the proxy object for SurfaceFlinger

sp<gui::ISurfaceComposer> sf(ComposerServiceAIDL::getComposerService());

if (sf != nullptr) {

mEventConnection = nullptr;

// Create a connection for vsyncSource events to the app EventThread in the SurfaceFlinger process

binder::Status status = sf->createDisplayEventConnection(vsyncSource, static_cast<gui::ISurfaceComposer::EventRegistration>(eventRegistration.get()), layerHandle, &mEventConnection);

if (status.isOk() && mEventConnection != nullptr) {

// On success, construct a BitTube and take over the read-end descriptor of the connection just created, for listening to later VSYNC signals

mDataChannel = std::make_unique<gui::BitTube>();

// Take over the socket read-end descriptor created in the SurfaceFlinger process

status = mEventConnection->stealReceiveChannel(mDataChannel.get());

if (!status.isOk()) {

ALOGE("stealReceiveChannel failed: %s", status.toString8().c_str());

mInitError = std::make_optional<status_t>(status.transactionError());

mDataChannel.reset();

mEventConnection.clear();

}

} else {

ALOGE("DisplayEventConnection creation failed: status=%s", status.toString8().c_str());

}

}

}

// frameworks/native/services/surfaceflinger/Scheduler/EventThread.cpp

sp<EventThreadConnection> EventThread::createEventConnection(EventRegistrationFlags eventRegistration) const {

auto connection = sp<EventThreadConnection>::make(const_cast<EventThread*>(this), IPCThreadState::self()->getCallingUid(), eventRegistration);

if (FlagManager::getInstance().misc1()) {

const int policy = SCHED_FIFO;

connection->setMinSchedulerPolicy(policy, sched_get_priority_min(policy));

}

return connection;

}

// Creates a BitTube, which creates a socket pair internally

EventThreadConnection::EventThreadConnection(EventThread* eventThread, uid_t callingUid, EventRegistrationFlags eventRegistration)

: mOwnerUid(callingUid),

mEventRegistration(eventRegistration),

mEventThread(eventThread),

mChannel(gui::BitTube::DefaultSize) {}

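The source shows that BitTube is what receives the signals sent by the SurfaceFlinger process, and BitTube.cpp shows that it is implemented with a pair of connected Unix domain sockets (socketpair) for cross-process delivery.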

// frameworks/native/libs/gui/BitTube.cpp

static const size_t DEFAULT_SOCKET_BUFFER_SIZE = 4 * 1024;

BitTube::BitTube(size_t bufsize) {

init(bufsize, bufsize);

}

void BitTube::init(size_t rcvbuf, size_t sndbuf) {

int sockets[2];

if (socketpair(AF_UNIX, SOCK_SEQPACKET, 0, sockets) == 0) {

size_t size = DEFAULT_SOCKET_BUFFER_SIZE;

setsockopt(sockets[0], SOL_SOCKET, SO_RCVBUF, &rcvbuf, sizeof(rcvbuf));

setsockopt(sockets[1], SOL_SOCKET, SO_SNDBUF, &sndbuf, sizeof(sndbuf));

// since we don't use the "return channel", we keep it small...

setsockopt(sockets[0], SOL_SOCKET, SO_SNDBUF, &size, sizeof(size));

setsockopt(sockets[1], SOL_SOCKET, SO_RCVBUF, &size, sizeof(size));

fcntl(sockets[0], F_SETFL, O_NONBLOCK);

fcntl(sockets[1], F_SETFL, O_NONBLOCK);

mReceiveFd.reset(sockets[0]);

mSendFd.reset(sockets[1]);

} else {

mReceiveFd.reset();

ALOGE("BitTube: pipe creation failed (%s)", strerror(errno));

}

}

base::unique_fd BitTube::moveReceiveFd() {

return std::move(mReceiveFd);

}
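The idea behind BitTube, a one-way channel whose non-blocking read end is handed to the consumer, can be sketched in Java. Java has no socketpair(), so this sketch swaps in java.nio.channels.Pipe; it is not how BitTube is implemented. MiniBitTube, sendVsync and pollVsync are hypothetical names, and timestamps are assumed to be non-negative so that -1 can mean that nothing is pending.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;

// Sketch only: a Pipe stands in for the socket pair used by the real BitTube.
final class MiniBitTube {
    private final Pipe.SinkChannel sendEnd;      // kept by the producer
    private final Pipe.SourceChannel receiveEnd; // handed to the consumer

    MiniBitTube() {
        try {
            Pipe pipe = Pipe.open();
            sendEnd = pipe.sink();
            receiveEnd = pipe.source();
            receiveEnd.configureBlocking(false); // comparable to O_NONBLOCK on the receive fd
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Producer side: write one fixed-size event that carries a timestamp.
    void sendVsync(long timestampNanos) {
        try {
            ByteBuffer buf = ByteBuffer.allocate(Long.BYTES);
            buf.putLong(timestampNanos);
            buf.flip();
            while (buf.hasRemaining()) {
                sendEnd.write(buf);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Consumer side: returns the timestamp, or -1 if no complete event is pending.
    // Simplification: a partially read event is dropped instead of being reassembled.
    long pollVsync() {
        try {
            ByteBuffer buf = ByteBuffer.allocate(Long.BYTES);
            int n = receiveEnd.read(buf);
            if (n < Long.BYTES) return -1;
            buf.flip();
            return buf.getLong();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

The non-blocking read end matters because the consumer reads from its Looper thread when the descriptor becomes readable, and it must not stall that thread when nothing is pending.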

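To summarize, the Choreographer creation flow is:

- SystemServer schedules a ClientTransaction, and the App process handles EXECUTE_TRANSACTION on the main thread.

- ActivityThread#handleResumeActivity calls addView, which creates the ViewRootImpl.

- The ViewRootImpl constructor obtains the thread's Choreographer, whose constructor creates the FrameHandler, the FrameDisplayEventReceiver and the CallbackQueue array.

- nativeInit creates the NativeDisplayEventReceiver, which connects to SurfaceFlinger and takes over the receive end of a BitTube.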

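Requesting VSYNC signals

On a 60 Hz display a VSYNC signal is typically generated every 16.67 ms, but an App process does not necessarily receive every one. Instead it listens for and receives VSYNC signals according to what the upper layers actually need. The benefit is that the app's rendering pipeline is triggered on demand, which avoids the power cost of unnecessary rendering.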

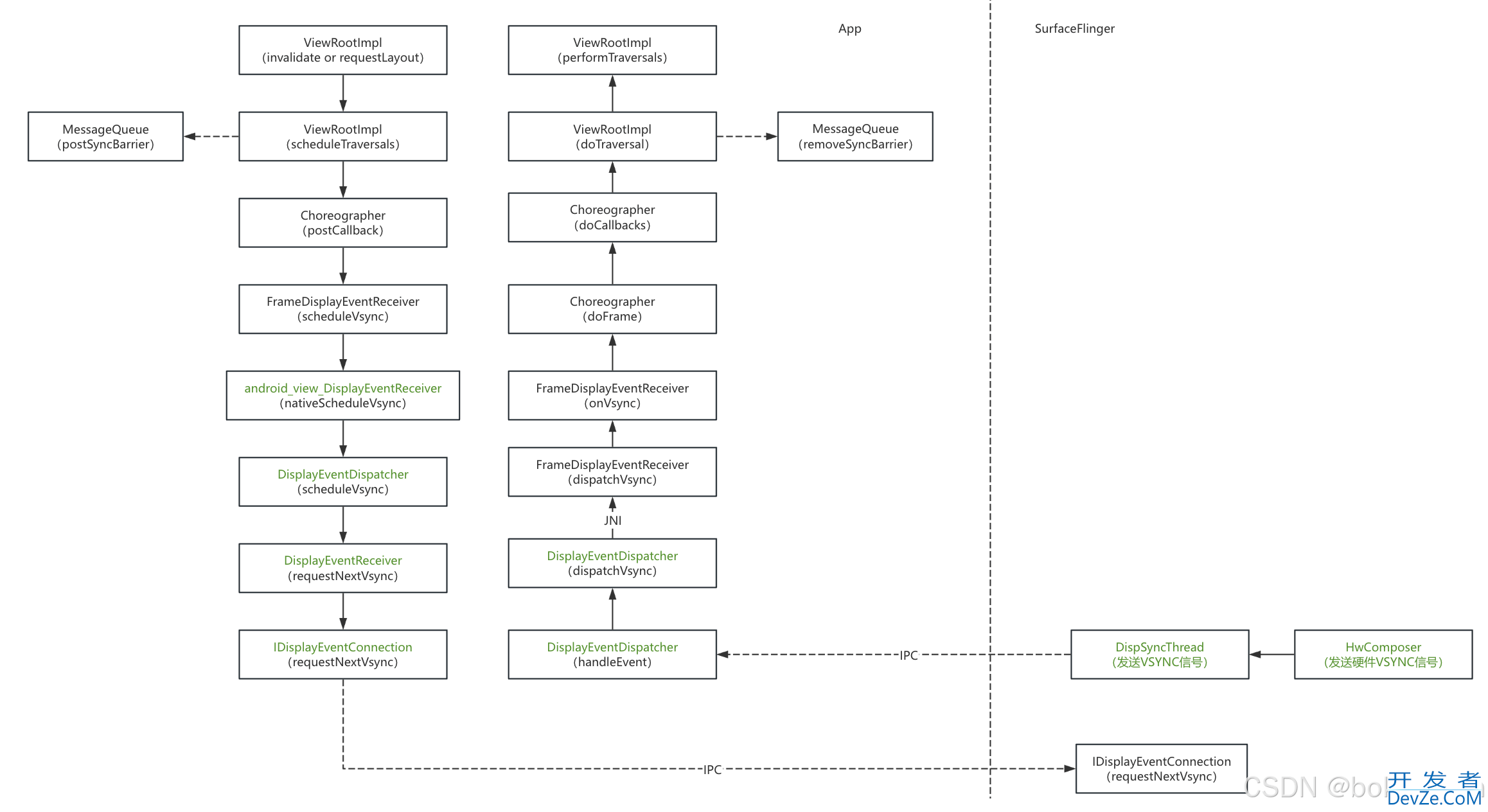
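The 16.67 ms figure follows from the refresh rate. The sketch below applies the same formula that the Choreographer constructor uses for mFrameIntervalNanos; FrameInterval and nanosFor are hypothetical names introduced for illustration.

```java
// Frame interval in nanoseconds for a given refresh rate.
final class FrameInterval {
    // Same arithmetic as mFrameIntervalNanos = (long)(1000000000 / getRefreshRate()).
    static long nanosFor(float refreshRateHz) {
        return (long) (1000000000 / refreshRateHz);
    }

    public static void main(String[] args) {
        for (float hz : new float[] {60f, 90f, 120f}) {
            System.out.println(hz + " Hz -> " + nanosFor(hz) / 1_000_000.0 + " ms per frame");
        }
    }
}
```

Higher refresh rates shrink the per-frame budget accordingly: about 11.1 ms at 90 Hz and about 8.3 ms at 120 Hz.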

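Upper layers usually start a draw request by calling invalidate or requestLayout. The request is eventually converted into a CallbackRecord and placed in the CallbackQueue of the matching type.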

public final class Choreographer {

// ...

private void postCallbackDelayedInternal(int callbackType,

Object action, Object token, long delayMillis) {

synchronized (mLock) {

final long now = SystemClock.uptimeMillis();

// Compute the timestamp at which the task should run

final long dueTime = now + delayMillis;

mCallbackQueues[callbackType].addCallbackLocked(dueTime, action, token);

if (dueTime <= now) { // No delay needed: request a VSYNC signal right away

scheduleFrameLocked(now);

} else { // Otherwise request the VSYNC signal later via a delayed message

Message msg = mHandler.obtainMessage(MSG_DO_SCHEDULE_CALLBACK, action);

msg.arg1 = callbackType;

msg.setAsynchronous(true);

mHandler.sendMessageAtTime(msg, dueTime);

}

}

}

private void scheduleFrameLocked(long now) {

if (!mFrameScheduled) {

mFrameScheduled = true;

if (USE_VSYNC) {

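// If the current thread is the Choreographer's Looper thread (the main thread here), request the VSYNC directly; otherwise post an asynchronous message to that thread to request it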

if (isRunningOnLooperThreadLocked()) {

scheduleVsyncLocked();

} else {

Message msg = mHandler.obtainMessage(MSG_DO_SCHEDULE_VSYNC);

msg.setAsynchronous(true);

mHandler.sendMessageAtFrontOfQueue(msg);

}

} else {

final long nextFrameTime = Math.max(

mLastFrameTimeNanos / TimeUtils.NANOS_PER_MS + sFrameDelay, now);

if (DEBUG_FRAMES) {

Log.d(TAG, "Scheduling next frame in " + (nextFrameTime - now) + " ms.");

}

Message msg = mHandler.obtainMessage(MSG_DO_FRAME);

msg.setAsynchronous(true);

mHandler.sendMessageAtTime(msg, nextFrameTime);

}

}

}

@UnsupportedAppUsage(maxTargetSdk = Build.VERSION_CODES.R, trackingBug = 170729553)

private void scheduleVsyncLocked() {

try {

Trace.traceBegin(Trace.TRACE_TAG_VIEW, "Choreographer#scheduleVsyncLocked");

// Request a VSYNC signal through FrameDisplayEventReceiver#scheduleVsync

mDisplayEventReceiver.scheduleVsync();

} finally {

Trace.traceEnd(Trace.TRACE_TAG_VIEW);

}

}

// ...

}

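In the end a native method is called to communicate with the SurfaceFlinger process and ask it to deliver a VSYNC signal to the App process. Recall that while the Choreographer was being created, the DisplayEventReceiver called into the native layer over JNI; the native layer created a NativeDisplayEventReceiver and returned its pointer to the Java layer, where it is stored in the DisplayEventReceiver object (mReceiverPtr). Now the Java layer passes that pointer back through JNI, so the native layer can find the NativeDisplayEventReceiver created earlier and call its scheduleVsync method, which finally makes the cross-process request through mEventConnection.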

// android.view.DisplayEventReceiver#scheduleVsync

@UnsupportedAppUsage

public void scheduleVsync() {

if (mReceiverPtr == 0) {

Log.w(TAG, "Attempted to schedule a vertical sync pulse but the display event "

+ "receiver has already been disposed.");

} else {

nativeScheduleVsync(mReceiverPtr);

}

}

// frameworks/base/core/jni/android_view_DisplayEventReceiver.cpp

static void nativeScheduleVsync(JNIEnv* env, jclass clazz, jlong receiverPtr) {

sp<NativeDisplayEventReceiver> receiver =

reinterpret_cast<NativeDisplayEventReceiver*>(receiverPtr);

status_t status = receiver->scheduleVsync();

if (status) {

String8 message;

message.appendFormat("Failed to schedule next vertical sync pulse. status=%d", status);

jniThrowRuntimeException(env, message.c_str());

}

}

// frameworks/native/libs/gui/DisplayEventDispatcher.cpp

status_t DisplayEventDispatcher::scheduleVsync() {

if (!mWaitingForVsync) {

ALOGV("dispatcher %p ~ Scheduling vsync.", this);

// Drain all pending events.

nsecs_t vsyncTimestamp;

PhysicalDisplayId vsyncDisplayId;

uint32_t vsyncCount;

VsyncEventData vsyncEventData;

if (processPendingEvents(&vsyncTimestamp, &vsyncDisplayId, &vsyncCount, &vsyncEventData)) {

ALOGE("dispatcher %p ~ last event processed while scheduling was for %" PRId64 "", this,

ns2ms(static_cast<nsecs_t>(vsyncTimestamp)));

}

// Request the next VSYNC signal

status_t status = mReceiver.requestNextVsync();

if (status) {

ALOGW("Failed to request next vsync, status=%d", status);

return status;

}

mWaitingForVsync = true;

mLastScheduleVsyncTime = systemTime(SYSTEM_TIME_MONOTONIC);

}

return OK;

}

// frameworks/native/libs/gui/DisplayEventReceiver.cpp

status_t DisplayEventReceiver::requestNextVsync() {

if (mEventConnection != nullptr) {

mEventConnection->requestNextVsync();

return NO_ERROR;

}

return mInitError.has_value() ? mInitError.value() : NO_INIT;

}
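Two ideas in the request path above can be modeled in plain Java: the Java object only keeps a long handle to its native peer (with 0 meaning "disposed"), and the peer sends at most one request while a VSYNC is still pending (mWaitingForVsync). The following is a sketch only. FakeNativeLayer, Peer and ReceiverModel are hypothetical names, and a HashMap stands in for real native memory and JNI.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical stand-in for the native side: objects are addressed by a long handle. */
final class FakeNativeLayer {
    static final class Peer {
        boolean waitingForVsync = false;   // mirrors mWaitingForVsync
        int requestsSent = 0;              // cross-process requests actually issued

        void scheduleVsync() {
            if (!waitingForVsync) {        // at most one request per pending VSYNC
                requestsSent++;            // stands in for requestNextVsync()
                waitingForVsync = true;
            }
        }

        void onVsyncDelivered() {
            waitingForVsync = false;       // the next scheduleVsync() requests again
        }
    }

    private static final Map<Long, Peer> PEERS = new HashMap<>();
    private static long nextHandle = 1;    // 0 is reserved for "no object"

    static long create() {
        long handle = nextHandle++;
        PEERS.put(handle, new Peer());
        return handle;
    }

    static Peer lookup(long handle) {
        return PEERS.get(handle);
    }

    static void destroy(long handle) {
        PEERS.remove(handle);
    }
}

/** Java-side owner that only stores the handle, in the spirit of mReceiverPtr. */
final class ReceiverModel {
    private long receiverPtr = FakeNativeLayer.create();

    /** Returns false when the receiver has already been disposed. */
    boolean scheduleVsync() {
        if (receiverPtr == 0) {
            return false;
        }
        FakeNativeLayer.lookup(receiverPtr).scheduleVsync();
        return true;
    }

    FakeNativeLayer.Peer peer() {
        return receiverPtr == 0 ? null : FakeNativeLayer.lookup(receiverPtr);
    }

    void dispose() {
        if (receiverPtr != 0) {
            FakeNativeLayer.destroy(receiverPtr);
            receiverPtr = 0;
        }
    }
}
```

Calling scheduleVsync() twice in a row on a ReceiverModel sends one request in this model; a second request goes out only after onVsyncDelivered() has cleared the waiting flag.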

Dispatching the VSYNC signal

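When the SurfaceFlinger process notifies the App process over a socket that a VSYNC signal has arrived, DisplayEventDispatcher::handleEvent is invoked in the App process, and the event eventually reaches the Java-layer FrameDisplayEventReceiver#dispatchVsync method (inherited from DisplayEventReceiver) through JNI.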

// frameworks/native/libs/gui/DisplayEventDispatcher.cpp

int DisplayEventDispatcher::handleEvent(int, int events, void*) {

if (events & (Looper::EVENT_ERROR | Looper::EVENT_HANGUP)) {

return 0; // remove the callback

}

if (!(events & Looper::EVENT_INPUT)) {

return 1; // keep the callback

}

// Drain all pending events, keep the last vsync.

nsecs_t vsyncTimestamp;

PhysicalDisplayId vsyncDisplayId;

uint32_t vsyncCount;

VsyncEventData vsyncEventData;

if (processPendingEvents(&vsyncTimestamp, &vsyncDisplayId, &vsyncCount, &vsyncEventData)) {

mWaitingForVsync = false;

mLastVsyncCount = vsyncCount;

dispatchVsync(vsyncTimestamp, vsyncDisplayId, vsyncCount, vsyncEventData);

}

if (mWaitingForVsync) {

const nsecs_t currentTime = systemTime(SYSTEM_TIME_MONOTONIC);

const nsecs_t vsyncScheduleDelay = currentTime - mLastScheduleVsyncTime;

if (vsyncScheduleDelay > WAITING_FOR_VSYNC_TIMEOUT) {

mWaitingForVsync = false;

dispatchVsync(currentTime, vsyncDisplayId /* displayId is not used */,

++mLastVsyncCount, vsyncEventData /* empty data */);

}

}

return 1; // keep the callback

}

// frameworks/base/core/jni/android_view_DisplayEventReceiver.cpp

void NativeDisplayEventReceiver::dispatchVsync(nsecs_t timestamp, PhysicalDisplayId displayId, uint32_t count, VsyncEventData vsyncEventData) {

JNIEnv* env = AndroidRuntime::getJNIEnv();

ScopedLocalRef<jobject> receiverObj(env, GetReferent(env, mReceiverWeakGlobal));

ScopedLocalRef<jobject> vsyncEventDataObj(env, GetReferent(env, mVsyncEventDataWeakGlobal));

if (receiverObj.get() && vsyncEventDataObj.get()) {

env->SetIntField(vsyncEventDataObj.get(), gDisplayEventReceiverClassInfo.vsyncEventDataClassInfo.preferredFrameTimelineIndex, vsyncEventData.preferredFrameTimelineIndex);

env->SetIntField(vsyncEventDataObj.get(), gDisplayEventReceiverClassInfo.vsyncEventDataClassInfo.frameTimelinesLength, vsyncEventData.frameTimelinesLength);

env->SetLongField(vsyncEventDataObj.get(), gDisplayEventReceiverClassInfo.vsyncEventDataClassInfo.frameInterval, vsyncEventData.frameInterval);

ScopedLocalRef<jobjectArray> frameTimelinesObj(env, reinterpret_cast<jobjectArray>(env->GetObjectField(vsyncEventDataObj.get(), gDisplayEventReceiverClassInfo.vsyncEventDataClassInfo.frameTimelines)));

for (size_t i = 0; i < vsyncEventData.frameTimelinesLength; i++) {

VsyncEventData::FrameTimeline& frameTimeline = vsyncEventData.frameTimelines[i];

ScopedLocalRef<jobject>

frameTimelineObj(env, env->GetObjectArrayElement(frameTimelinesObj.get(), i));

env->SetLongField(frameTimelineObj.get(),

gDisplayEventReceiverClassInfo.frameTimelineClassInfo.vsyncId,

frameTimeline.vsyncId);

env->SetLongField(frameTimelineObj.get(),

gDisplayEventReceiverClassInfo.frameTimelineClassInfo

.expectedPresentationTime,

frameTimeline.expectedPresentationTime);

env->SetLongField(frameTimelineObj.get(),

gDisplayEventReceiverClassInfo.frameTimelineClassInfo.deadline,

frameTimeline.deadlineTimestamp);

}

// Finally call into the Java-layer dispatchVsync

env->CallVoidMethod(receiverObj.get(), gDisplayEventReceiverClassInfo.dispatchVsync, timestamp, displayId.value, count);

ALOGV("receiver %p ~ Returned from vsync handler.", this);

}

mMessageQueue->raiseAndClearException(env, "dispatchVsync");

}

// android.view.DisplayEventReceiver

// Called from native code.

@SuppressWarnings("unused")

private void dispatchVsync(long timestampNanos, long physicalDisplayId, int frame,

VsyncEventData vsyncEventData) {

onVsync(timestampNanos, physicalDisplayId, frame, vsyncEventData);

}

// android.view.Choreographer.FrameDisplayEventReceiver

@Override

public void onVsync(long timestampNanos, long physicalDisplayId, int frame, VsyncEventData vsyncEventData) {

try {

long now = System.nanoTime();

if (timestampNanos > now) {

timestampNanos = now;

}

if (mHavePendingVsync) {

Log.w(TAG, "Already have a pending vsync event. There should only be "

+ "one at a time.");

} else {

mHavePendingVsync = true;

}

mTimestampNanos = timestampNanos;

mFrame = frame;

mLastVsyncEventData = vsyncEventData;

Message msg = Message.obtain(mHandler, this);

msg.setAsynchronous(true); // Asynchronous message: it is not blocked by the sync barrier inserted earlier, so it is handled ahead of ordinary messages

mHandler.sendMessageAtTime(msg, timestampNanos / TimeUtils.NANOS_PER_MS);

} finally {

Trace.traceEnd(Trace.TRACE_TAG_VIEW);

}

}

@Override

public void run() {

mHavePendingVsync = false;

doFrame(mTimestampNanos, mFrame, mLastVsyncEventData);

}

Control finally reaches Choreographer#doFrame. This is where processing of a new frame begins and the data for the next frame starts being prepared.
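Three small steps of the dispatch path can be written down as pure functions: handleEvent drains all pending events and keeps only the last VSYNC, onVsync clamps a timestamp that lies in the future to the current time, and the message delivery time is the nanosecond timestamp converted to milliseconds. The sketch below is a simplified model, not framework code. VsyncDispatchModel and its method names are hypothetical, and a Deque of timestamps stands in for the socket's event buffer.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Hypothetical helpers that mirror three steps of the VSYNC dispatch path. */
final class VsyncDispatchModel {
    private static final long NANOS_PER_MS = 1_000_000L;

    /** Drain every pending timestamp and return the most recent one, or -1 if none. */
    static long drainKeepLast(Deque<Long> pending) {
        long last = -1;
        while (!pending.isEmpty()) {
            last = pending.pollFirst();   // later events overwrite earlier ones
        }
        return last;
    }

    /** A VSYNC timestamp is never allowed to lie in the future. */
    static long clampToNow(long timestampNanos, long nowNanos) {
        return Math.min(timestampNanos, nowNanos);
    }

    /** Delivery time of the frame message, in milliseconds. */
    static long messageTimeMillis(long timestampNanos) {
        return timestampNanos / NANOS_PER_MS;
    }
}
```

For example, if three VSYNC events are queued before the thread gets to read them, drainKeepLast returns only the newest timestamp, so one frame is produced rather than three.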

Handling the VSYNC signal

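Once the App process receives the VSYNC signal, it calls doFrame to start preparing the data for a new frame. doFrame also computes the jank time, that is, how long the VSYNC signal waited before the main thread handled it. If that wait is too long, the frame's data cannot be prepared within one frame interval, and what the user sees is no longer smooth.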

// android.view.Choreographer

void doFrame(long frameTimeNanos, int frame, DisplayEventReceiver.VsyncEventData vsyncEventData) {

final long startNanos;

final long frameIntervalNanos = vsyncEventData.frameInterval;

try {

FrameData frameData = new FrameData(frameTimeNanos, vsyncEventData);

synchronized (mLock) {

if (!mFrameScheduled) {

traceMessage("Frame not scheduled");

return; // no work to do

}

long intendedFrameTimeNanos = frameTimeNanos;

startNanos = System.nanoTime();

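// frameTimeNanos is the timestamp passed from SurfaceFlinger; onVsync may have clamped it to the time at which the App process received the VSYNC signal

// jitterNanos includes the time the message waited in the Handler queue, i.e. the time the main thread spent on other messages before this asynchronous message was handled; if it is too long, the result is jank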

final long jitterNanos = startNanos - frameTimeNanos;

if (jitterNanos >= frameIntervalNanos) {

long lastFrameOffset = 0;

if (frameIntervalNanos == 0) {

Log.i(TAG, "Vsync data empty due to timeout");

} else {

lastFrameOffset = jitterNanos % frameIntervalNanos;

final long skippedFrames = jitterNanos / frameIntervalNanos;

if (skippedFrames >= SKIPPED_FRAME_WARNING_LIMIT) {

Log.i(TAG, "Skipped " + skippedFrames + " frames! "

+ "The application may be doing too much work on its main "

+ "thread.");

}

if (DEBUG_JANK) {

Log.d(TAG, "Missed vsync by " + (jitterNanos * 0.000001f) + " ms "

+ "which is more than the frame interval of "

+ (frameIntervalNanos * 0.000001f) + " ms! "

+ "Skipping " + skippedFrames + " frames and setting frame "

+ "time to " + (lastFrameOffset * 0.000001f)

+ " ms in the past.");

}

}

frameTimeNanos = startNanos - lastFrameOffset;

frameData.updateFrameData(frameTimeNanos);

}

if (frameTimeNanos < mLastFrameTimeNanos) {

if (DEBUG_JANK) {

Log.d(TAG, "Frame time appears to be going backwards. May be due to a "

+ "previously skipped frame. Waiting for next vsync.");

}

traceMessage("Frame time goes backward");

scheduleVsyncLocked();

return;

}

if (mFPSDivisor > 1) {

long timeSinceVsync = frameTimeNanos - mLastFrameTimeNanos;

if (timeSinceVsync < (frameIntervalNanos * mFPSDivisor) && timeSinceVsync > 0) {

traceMessage("Frame skipped due to FPSDivisor");

scheduleVsyncLocked();

return;

}

}

mFrameInfo.setVsync(intendedFrameTimeNanos, frameTimeNanos,

vsyncEventData.preferredFrameTimeline().vsyncId,

vsyncEventData.preferredFrameTimeline().deadline, startNanos,

vsyncEventData.frameInterval);

mFrameScheduled = false;

mLastFrameTimeNanos = frameTimeNanos;

mLastFrameIntervalNanos = frameIntervalNanos;

mLastVsyncEventData = vsyncEventData;

}

// Run each type of callback in order

AnimationUtils.lockAnimationClock(frameTimeNanos / TimeUtils.NANOS_PER_MS);

mFrameInfo.markInputHandlingStart();

doCallbacks(Choreographer.CALLBACK_INPUT, frameData, frameIntervalNanos);

mFrameInfo.markAnimationsStart();

doCallbacks(Choreographer.CALLBACK_ANIMATION, frameData, frameIntervalNanos);

doCallbacks(Choreographer.CALLBACK_INSETS_ANIMATION, frameData,

frameIntervalNanos);

mFrameInfo.markPerformTraversalsStart();

doCallbacks(Choreographer.CALLBACK_TRAVERSAL, frameData, frameIntervalNanos);

doCallbacks(Choreographer.CALLBACK_COMMIT, frameData, frameIntervalNanos);

} finally {

AnimationUtils.unlockAnimationClock();

Trace.traceEnd(Trace.TRACE_TAG_VIEW);

}

}
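The jitter correction inside doFrame is plain integer arithmetic, so it can be checked with a worked example. The sketch below reproduces only that arithmetic. JitterModel, Result and adjust are hypothetical names, and logging, locking and the later "time going backwards" check are left out.

```java
/** Hypothetical model of the skipped-frame arithmetic in doFrame. */
final class JitterModel {
    /** Adjusted frame time plus the number of frame intervals that were missed. */
    static final class Result {
        final long frameTimeNanos;
        final long skippedFrames;

        Result(long frameTimeNanos, long skippedFrames) {
            this.frameTimeNanos = frameTimeNanos;
            this.skippedFrames = skippedFrames;
        }
    }

    static Result adjust(long frameTimeNanos, long startNanos, long frameIntervalNanos) {
        long jitterNanos = startNanos - frameTimeNanos;
        if (jitterNanos < frameIntervalNanos) {
            return new Result(frameTimeNanos, 0);     // handled within one interval
        }
        long lastFrameOffset = 0;
        long skippedFrames = 0;
        if (frameIntervalNanos != 0) {                // 0 means empty VSYNC data (timeout)
            lastFrameOffset = jitterNanos % frameIntervalNanos;
            skippedFrames = jitterNanos / frameIntervalNanos;
        }
        // Snap the frame time to the most recent VSYNC boundary before startNanos.
        return new Result(startNanos - lastFrameOffset, skippedFrames);
    }
}
```

With a frame interval of 16,666,667 ns, a VSYNC timestamp of 0 and handling that starts at 40,000,000 ns, the jitter is 40,000,000 ns. That is 2 whole intervals (33,333,334 ns) plus an offset of 6,666,666 ns, so 2 frames count as skipped and the frame time becomes 40,000,000 - 6,666,666 = 33,333,334 ns.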

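Next, doFrame executes the CallbackRecords that application code submitted earlier. They fall into the following types:

- CALLBACK_INPUT: input callbacks, such as screen touch events; these run first;

- CALLBACK_ANIMATION: animation callbacks, such as property animations;

- CALLBACK_INSETS_ANIMATION: window insets animation callbacks, which handle insets updates and run after the ordinary animation callbacks;

- CALLBACK_TRAVERSAL: layout and drawing callbacks, i.e. View measure, layout and draw;

- CALLBACK_COMMIT: commit callbacks, which run last, after the traversal has been handled.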

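Once all CallbackRecords have been executed in this order, the App process has finished its drawing work for the frame. The rendered data is submitted to a GraphicBuffer, an sf-type VSYNC signal is then scheduled, and SurfaceFlinger finally composites the data and sends it to the display.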
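The ordering rule described above (one queue per callback type, types run in a fixed order, and within a type only callbacks that are already due) can be sketched as follows. This is a simplified model under stated assumptions: CallbackOrderModel, Entry, post and doFrame are hypothetical names, callbacks are represented by labels instead of Runnables, and an ArrayList kept sorted by due time replaces the framework's linked CallbackRecord list.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Hypothetical model of per-type callback queues executed in type order. */
final class CallbackOrderModel {
    // Same relative order as the Choreographer callback types.
    static final int CALLBACK_INPUT = 0;
    static final int CALLBACK_ANIMATION = 1;
    static final int CALLBACK_INSETS_ANIMATION = 2;
    static final int CALLBACK_TRAVERSAL = 3;
    static final int CALLBACK_COMMIT = 4;

    private static final class Entry {
        final long dueTimeMillis;
        final String name;

        Entry(long dueTimeMillis, String name) {
            this.dueTimeMillis = dueTimeMillis;
            this.name = name;
        }
    }

    private final List<List<Entry>> queues = new ArrayList<>();

    CallbackOrderModel() {
        for (int i = 0; i <= CALLBACK_COMMIT; i++) {
            queues.add(new ArrayList<>());
        }
    }

    /** Insert a callback into the queue of its type, ordered by due time. */
    void post(int type, long dueTimeMillis, String name) {
        List<Entry> queue = queues.get(type);
        queue.add(new Entry(dueTimeMillis, name));
        queue.sort(Comparator.comparingLong((Entry e) -> e.dueTimeMillis)); // stable sort
    }

    /** Run every due callback, type by type, and return the execution order. */
    List<String> doFrame(long frameTimeMillis) {
        List<String> executed = new ArrayList<>();
        for (List<Entry> queue : queues) {
            while (!queue.isEmpty() && queue.get(0).dueTimeMillis <= frameTimeMillis) {
                executed.add(queue.remove(0).name);
            }
        }
        return executed;
    }
}
```

Whatever order the callbacks were posted in, a frame runs input first, then animation, insets animation, traversal and commit. A callback whose due time has not been reached stays queued for a later frame.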

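To summarize the VSYNC dispatch and handling flow: SurfaceFlinger notifies the App process over the socket, DisplayEventDispatcher::handleEvent reads the event, NativeDisplayEventReceiver::dispatchVsync calls up into Java through JNI, FrameDisplayEventReceiver#onVsync posts an asynchronous message to the main thread, and its run method calls Choreographer#doFrame, which executes the callbacks type by type.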

Summary

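As a whole, the Choreographer mechanism coordinates the App process and the SurfaceFlinger process. It accepts UI refresh requests from application code, schedules VSYNC requests in one place, and aligns UI rendering work with the VSYNC timeline. It also acts as a relay that dispatches VSYNC signals and services the refresh requests coming from upper layers. Each frame's data is therefore prepared regularly, once per VSYNC period, and the SurfaceFlinger process composites it and puts it on screen.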
