Contents

- 0. Introduction

- 1. System calls

- 1.1 Scenario

- 1.2 Entering a system call

- 1.3 Returning from a system call

- 2. Summary

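0. Introduction

The previous post analyzed the preemption strategy in the Go scheduler. This post looks at the scheduling behavior that occurs during system calls.

1. System calls

Below, we use a simple file-open system call to examine what the Go scheduler does during a system call.

1.1 Scenario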

package main

import (
	"fmt"
	"io/ioutil"
	"os"
)

func main() {
	f, err := os.Open("./file")
	if err != nil {
		panic(err)
	}
	defer f.Close()

	content, err := ioutil.ReadAll(f)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(content))
}

The simple program above reads a local file named file and prints its contents. We can trace the call path through the disassembly:

$ go build -gcflags "-N -l" -o main main.go

$ objdump -d main >> main.i

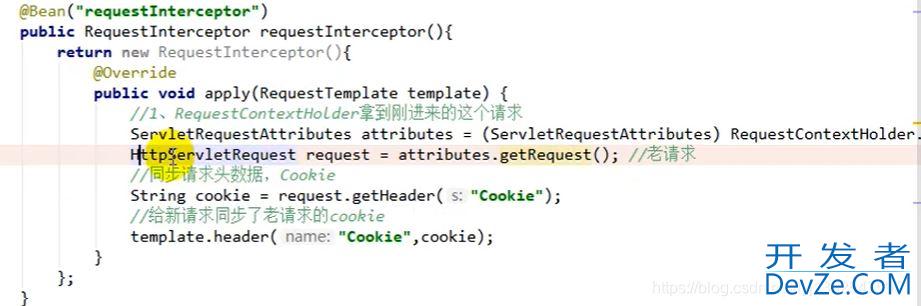

In main.i, the call chain from main.main for the file Open operation is: main.main -> os.Open -> os.openFile -> os.openFileNolog -> syscall.openat -> syscall.Syscall6.abi0 -> runtime.entersyscall.abi0. The assembly for Syscall6 is:

TEXT ·Syscall6(SB),NOSPLIT,$0-80
	CALL	runtime·entersyscall(SB)
	MOVQ	a1+8(FP), DI
	MOVQ	a2+16(FP), SI
	MOVQ	a3+24(FP), DX
	MOVQ	a4+32(FP), R10
	MOVQ	a5+40(FP), R8
	MOVQ	a6+48(FP), R9
	MOVQ	trap+0(FP), AX	// syscall entry
	SYSCALL
	CMPQ	AX, $0xfffffffffffff001
	JLS	ok6
	MOVQ	$-1, r1+56(FP)
	MOVQ	$0, r2+64(FP)
	NEGQ	AX
	MOVQ	AX, err+72(FP)
	CALL	runtime·exitsyscall(SB)
	RET
ok6:
	MOVQ	AX, r1+56(FP)
	MOVQ	DX, r2+64(FP)
	MOVQ	$0, err+72(FP)
	CALL	runtime·exitsyscall(SB)
	RET
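The tail of Syscall6 decodes the raw kernel return value: on Linux/amd64 the kernel signals failure by returning a negated errno in AX, which the CMPQ/JLS/NEGQ sequence turns into the (r1, err) pair. Below is a minimal Go sketch of just that decoding step. The helper name decodeReturn is hypothetical, and a uint64 stands in for the AX register.

```go
package main

import "fmt"

// decodeReturn mirrors the CMPQ/JLS/NEGQ sequence in Syscall6.
// It is an illustrative helper, not part of the syscall package.
func decodeReturn(ax uint64) (r1 uint64, errno uint64) {
	// JLS is an unsigned "lower or same" jump: values up to
	// 0xfffffffffffff001 take the ok6 path and are returned as-is.
	if ax <= 0xfffffffffffff001 {
		return ax, 0
	}
	// Otherwise AX holds a negated errno: r1 becomes -1 and the
	// error is recovered by negating AX (NEGQ).
	return ^uint64(0), -ax
}

func main() {
	r1, errno := decodeReturn(3) // e.g. a file descriptor
	fmt.Println(int64(r1), errno)

	r1, errno = decodeReturn(^uint64(0) - 1) // the bit pattern of -2
	fmt.Println(int64(r1), errno)
}
```

The second call models a failed open: the kernel returned -2, so the wrapper reports r1 = -1 and errno 2. In both paths the real assembly calls runtime·exitsyscall before returning.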

1.2 Entering a system call

As shown above, the system call ends up in the runtime.entersyscall function:

func entersyscall() {
	reentersyscall(getcallerpc(), getcallersp())
}

runtime.entersyscall in turn calls runtime.reentersyscall:

func reentersyscall(pc, sp uintptr) {
	_g_ := getg()

	// Disable preemption because during this function g is in Gsyscall status,
	// but can have inconsistent g->sched, do not let GC observe it.
	_g_.m.locks++

	// Entersyscall must not call any function that might split/grow the stack.
	// (See details in comment above.)
	// Catch calls that might, by replacing the stack guard with something that
	// will trip any stack check and leaving a flag to tell newstack to die.
	_g_.stackguard0 = stackPreempt
	_g_.throwsplit = true

	// Leave SP around for GC and traceback.
	save(pc, sp) // save pc and sp
	_g_.syscallsp = sp
	_g_.syscallpc = pc
	casgstatus(_g_, _Grunning, _Gsyscall)
	if _g_.syscallsp < _g_.stack.lo || _g_.stack.hi < _g_.syscallsp {
		systemstack(func() {
			print("entersyscall inconsistent ", hex(_g_.syscallsp), " [", hex(_g_.stack.lo), ",", hex(_g_.stack.hi), "]\n")
			throw("entersyscall")
		})
	}

	if trace.enabled {
		systemstack(traceGoSysCall)
		// systemstack itself clobbers g.sched.{pc,sp} and we might
		// need them later when the G is genuinely blocked in a
		// syscall
		save(pc, sp)
	}

	if atomic.Load(&sched.sysmonwait) != 0 {
		systemstack(entersyscall_sysmon)
		save(pc, sp)
	}

	if _g_.m.p.ptr().runSafePointFn != 0 {
		// runSafePointFn may stack split if run on this stack
		systemstack(runSafePointFn)
		save(pc, sp)
	}

	// The code below unbinds the P from the M.
	_g_.m.syscalltick = _g_.m.p.ptr().syscalltick
	_g_.sysblocktraced = true
	pp := _g_.m.p.ptr()
	pp.m = 0
	_g_.m.oldp.set(pp) // remember the old P
	_g_.m.p = 0
	atomic.Store(&pp.status, _Psyscall)
	if sched.gcwaiting != 0 {
		systemstack(entersyscall_gcwait)
		save(pc, sp)
	}

	_g_.m.locks--
}

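As we can see, apart from some safeguarding work, runtime.reentersyscall does three important things:

- It saves the PC and stack pointer (SP) of the current goroutine;

- It sets the status of the current goroutine to _Gsyscall;

- It sets the status of the current P to _Psyscall and unbinds the P from the M, so the current M can block in the kernel while the P is freed up for another M looking for work to pick up and run the remaining goroutines.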
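The three steps above can be sketched as a toy state model. This is not runtime code: the types g, m and p and the function enterSyscall are simplified stand-ins whose names are chosen to mirror the runtime's, and everything that deals with tracing, sysmon and the GC is left out.

```go
package main

import "fmt"

// Toy status values; names mirror the runtime's, but these are
// illustrative constants, not the runtime's definitions.
type gStatus int
type pStatus int

const (
	gRunning gStatus = iota
	gSyscall
)

const (
	pRunning pStatus = iota
	pSyscall
)

type p struct {
	status pStatus
	m      *m // the M currently bound to this P, if any
}

type m struct {
	p    *p // the P currently bound to this M, if any
	oldp *p // the P this M held before entering the syscall
}

type g struct {
	status    gStatus
	m         *m
	syscallpc uintptr
	syscallsp uintptr
}

// enterSyscall models the three steps listed above.
func enterSyscall(gp *g, pc, sp uintptr) {
	// Step 1: save the PC and SP.
	gp.syscallpc, gp.syscallsp = pc, sp
	// Step 2: mark the goroutine as being in a syscall.
	gp.status = gSyscall
	// Step 3: unbind P and M, remembering the old P on the M.
	pp := gp.m.p
	pp.m = nil
	gp.m.oldp = pp
	gp.m.p = nil
	pp.status = pSyscall
}

func main() {
	pp := &p{status: pRunning}
	mp := &m{p: pp}
	pp.m = mp
	gp := &g{status: gRunning, m: mp}

	enterSyscall(gp, 0x1000, 0x2000)
	// prints: true true true true
	fmt.Println(gp.status == gSyscall, pp.status == pSyscall, mp.p == nil, mp.oldp == pp)
}
```

Keeping the old P on the M is what makes the cheap return path possible: on exit, the M can first try to take back exactly that P.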

1.3 Returning from a system call

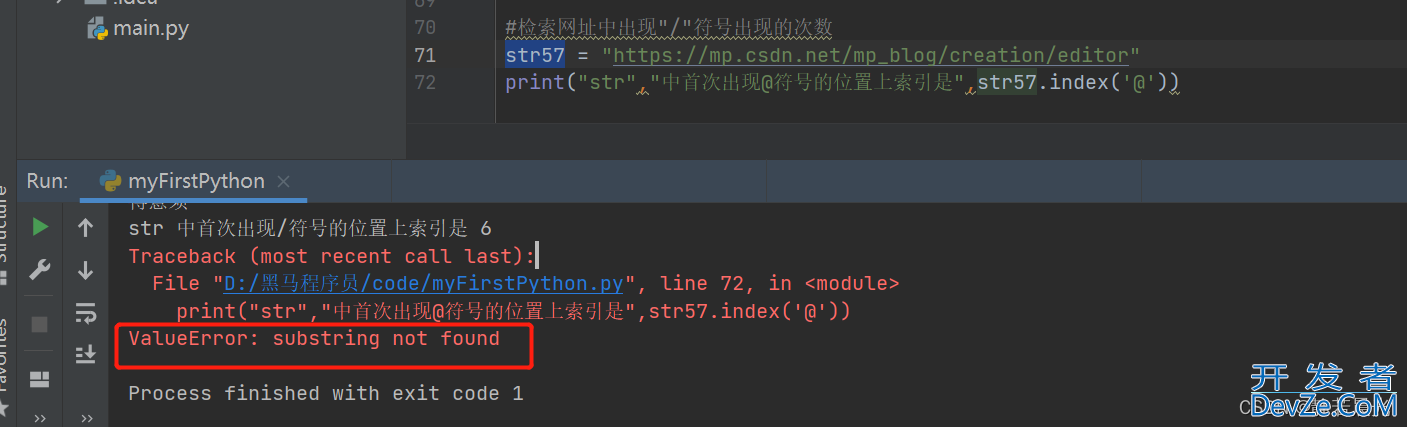

func exitsyscall() {
	_g_ := getg()

	_g_.m.locks++ // see comment in entersyscall
	if getcallersp() > _g_.syscallsp {
		throw("exitsyscall: syscall frame is no longer valid")
	}

	_g_.waitsince = 0
	oldp := _g_.m.oldp.ptr() // fetch the old P saved on entry
	_g_.m.oldp = 0
	if exitsyscallfast(oldp) {
		if trace.enabled {
			if oldp != _g_.m.p.ptr() || _g_.m.syscalltick != _g_.m.p.ptr().syscalltick {
				systemstack(traceGoStart)
			}
		}
		// There's a cpu for us, so we can run.
		_g_.m.p.ptr().syscalltick++
		// We need to cas the status and scan before resuming...
		casgstatus(_g_, _Gsyscall, _Grunning)
		...
		return
	}
	...
	// Call the scheduler.
	mcall(exitsyscall0)

	// Scheduler returned, so we're allowed to run now.
	// Delete the syscallsp information that we left for
	// the garbage collector during the system call.
	// Must wait until now because until gosched returns
	// we don't know for sure that the garbage collector
	// is not running.
	_g_.syscallsp = 0
	_g_.m.p.ptr().syscalltick++
	_g_.throwsplit = false
}

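exitsyscallfast has the following branches:

- If the old P has not been taken by another M and is still in the _Psyscall state, re-acquire it directly via wirep and return true;

- If the old P has been taken, call exitsyscallfast_pidle to grab an idle P and return true;

- If there is no idle P, return false.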

//go:nosplit
func exitsyscallfast(oldp *p) bool {
	_g_ := getg()

	// Freezetheworld sets stopwait but does not retake P's.
	if sched.stopwait == freezeStopWait {
		return false
	}

	// If the previous P has not been taken by another M and is still
	// in _Psyscall, re-acquire it directly via wirep.
	// Try to re-acquire the last P.
	if oldp != nil && oldp.status == _Psyscall && atomic.Cas(&oldp.status, _Psyscall, _Pidle) {
		// There's a cpu for us, so we can run.
		wirep(oldp)
		exitsyscallfast_reacquired()
		return true
	}

	// Try to get any other idle P.
	if sched.pidle != 0 {
		var ok bool
		systemstack(func() {
			ok = exitsyscallfast_pidle()
			if ok && trace.enabled {
				if oldp != nil {
					// Wait till traceGoSysBlock event is emitted.
					// This ensures consistency of the trace (the goroutine is started after it is blocked).
					for oldp.syscalltick == _g_.m.syscalltick {
						osyield()
					}
				}
				traceGoSysExit(0)
			}
		})
		if ok {
			return true
		}
	}
	return false
}
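The three branches of exitsyscallfast can be modelled with a compare-and-swap on the P's status word. The sketch below is a simplified illustration under stated assumptions: proc and exitFast are hypothetical names, the idle list is a plain slice (the runtime guards its list with sched.lock), and the real _Psyscall -> _Pidle -> _Prunning sequence is collapsed into a single CAS.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// Toy P states, named after the runtime's but defined here.
const (
	pIdle uint32 = iota
	pRunning
	pSyscall
)

type proc struct {
	status uint32
}

// exitFast returns the P the thread may run on, or nil when the
// fast path fails. The idle slice is not safe for concurrent use.
func exitFast(oldp *proc, idle *[]*proc) *proc {
	// Branch 1: the old P is still in pSyscall, so nobody took it.
	// The CAS succeeds for at most one contender.
	if oldp != nil && atomic.CompareAndSwapUint32(&oldp.status, pSyscall, pRunning) {
		return oldp
	}
	// Branch 2: the old P is gone, take any idle P.
	if n := len(*idle); n > 0 {
		pp := (*idle)[n-1]
		*idle = (*idle)[:n-1]
		atomic.StoreUint32(&pp.status, pRunning)
		return pp
	}
	// Branch 3: no P available, the caller must take the slow path.
	return nil
}

func main() {
	old := &proc{status: pSyscall}
	fmt.Println(exitFast(old, &[]*proc{}) == old) // true: old P re-acquired

	stolen := &proc{status: pRunning} // another M already runs on it
	spare := &proc{status: pIdle}
	idle := []*proc{spare}
	fmt.Println(exitFast(stolen, &idle) == spare) // true: idle P taken
	fmt.Println(exitFast(stolen, &idle) == nil)   // true: fast path failed
}
```

The CAS matters because another thread may be trying to retake the same P at the same moment. Only one side can win the transition out of the syscall state.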

When exitsyscallfast returns false, exitsyscall0 is called (via mcall) to handle the slow path:

func exitsyscall0(gp *g) {
	casgstatus(gp, _Gsyscall, _Grunnable)
	dropg() // this M found no P, so first detach the g from the m
	lock(&sched.lock)
	var _p_ *p
	if schedEnabled(gp) {
		_p_ = pidleget() // try once more to find an idle P
	}
	var locked bool
	if _p_ == nil { // still no idle P: put the g on the global queue to wait for scheduling
		globrunqput(gp)

		// Below, we stoplockedm if gp is locked. globrunqput releases
		// ownership of gp, so we must check if gp is locked prior to
		// committing the release by unlocking sched.lock, otherwise we
		// could race with another M transitioning gp from unlocked to
		// locked.
		locked = gp.lockedm != 0
	} else if atomic.Load(&sched.sysmonwait) != 0 {
		atomic.Store(&sched.sysmonwait, 0)
		notewakeup(&sched.sysmonnote)
	}
	unlock(&sched.lock)
	if _p_ != nil { // found an idle P: run gp on it; this branch never returns
		acquirep(_p_)
		execute(gp, false) // Never returns.
	}
	if locked {
		// Wait until another thread schedules gp and so m again.
		//
		// N.B. lockedm must be this M, as this g was running on this M
		// before entersyscall.
		stoplockedm()
		execute(gp, false) // Never returns.
	}
	stopm()    // still no idle P: park this M until it is woken up with a P
	schedule() // Never returns. Once woken, look for work via schedule.
}

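exitsyscall0 tries once more to find an idle P. If there is none, it puts the goroutine on the global queue, stops this M, and calls schedule to wait to be scheduled. If it does find an idle P, it uses that P to run the goroutine.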
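The decision made in exitsyscall0 can be reduced to a few lines. The following is a toy sketch under heavy simplification: the scheduler type and the exitSlow method are hypothetical, Ps are plain integer IDs, goroutines are names, and locking, locked Ms and sysmon wakeups are ignored.

```go
package main

import "fmt"

// scheduler is a toy stand-in for the runtime's global scheduler state.
type scheduler struct {
	idleP   []int    // IDs of idle Ps
	globalQ []string // runnable goroutines waiting for any P
}

// exitSlow reports the P the goroutine will run on. If no P is idle,
// the goroutine is appended to the global queue and ok is false,
// which in the runtime is the point where the M parks itself.
func (s *scheduler) exitSlow(gName string) (pID int, ok bool) {
	if n := len(s.idleP); n > 0 {
		pID = s.idleP[n-1]
		s.idleP = s.idleP[:n-1]
		return pID, true
	}
	s.globalQ = append(s.globalQ, gName)
	return -1, false
}

func main() {
	s := &scheduler{idleP: []int{2}}

	id, ok := s.exitSlow("g1")
	fmt.Println(id, ok) // 2 true: an idle P was available

	id, ok = s.exitSlow("g2")
	fmt.Println(id, ok)    // -1 false: no P left
	fmt.Println(s.globalQ) // [g2]
}
```

A goroutine placed on the global queue is not lost: any M that later runs schedule with a P can pick it up.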

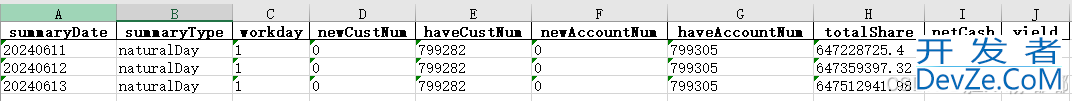

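2. Summary

The analysis above shows that goroutine scheduling around system calls is fairly straightforward:

- When a system call occurs, the goroutine is put into the _Gsyscall state;

- The P is put into the _Psyscall state and unbound from the M, so the P can go on to run other goroutines while the M blocks in the kernel;

- When the M returns from the kernel to user space, it first tries to take back its old P. If no other M has taken that P, the M uses it to continue running the goroutine;

- If the old P is unavailable, the M tries to get a P from the idle list;

- If the idle list has no P either, the goroutine is put on the global queue to wait until it can run again.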

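It follows that a program making very frequent system calls may create many Ms. In I/O-intensive scenarios, the thread count can even exceed the 10000-thread limit and crash the program. The Go team has put a lot of work into this problem. The next post covers the network poller and shows how Go optimizes at least the network-I/O-intensive case.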
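The 10000-thread figure is the initial setting documented for runtime/debug.SetMaxThreads. As a small illustration, the sketch below reads the current limit. The helper maxThreads is a hypothetical name; it relies on SetMaxThreads returning the previous setting and restores that value immediately.

```go
package main

import (
	"fmt"
	"runtime/debug"
)

// maxThreads reports the current OS-thread limit without changing it.
// SetMaxThreads returns the previous setting, so we set a temporary
// value and then put the previous one back.
func maxThreads() int {
	prev := debug.SetMaxThreads(10000)
	debug.SetMaxThreads(prev)
	return prev
}

func main() {
	fmt.Println("thread limit:", maxThreads())
}
```

Raising the limit only moves the ceiling. A program that keeps many goroutines blocked in system calls still pays for one OS thread per blocked call.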
