I've previously asked how long it takes for a winning combination to appear on Google's Web Optimizer, but now I have another weird problem during an A/B test:

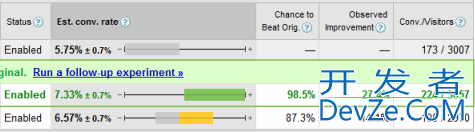

For the past two days, Google has announced that there was a "High Confidence Winner" that had a 98.5% chance of beating the original variation by 27.4%. Great!

I decided to leave it running to make absolutely sure, but something weird happened: Today Google is saying that they "haven't collected enough data yet to show any significant results" (as shown below). Sure, the figures have changed slightly, but they're still very high: 96.6% chance of beating the original by 22%.

So, why is Google not so sure now?

How could it have gone from having a statistically significant "High Confidence" winner, to not having enough data to calculate one? Are my numbers too tiny for Google to be absolutely sure or something?

Thanks for any insights!

> How could it have gone from having a statistically significant "High Confidence" winner, to not having enough data to calculate one?

Every statistical test has what's called a p-value, which is the probability of obtaining a result at least as extreme as the one observed purely by random chance, assuming that there is no real difference between the things being tested. So when you run a test, you want a small p-value so that you can be confident in your results.
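As a rough illustration of what a p-value is, here is a minimal sketch using a pooled two-proportion z-test. This is an assumption for illustration only: it is not necessarily how GWO computes its numbers, the function name `one_sided_p_value` is made up, and the visitor and conversion counts are hypothetical rather than taken from the question.

```python
import math

def one_sided_p_value(conv_a, n_a, conv_b, n_b):
    """P-value for 'B converts better than A' under a pooled two-proportion z-test."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled conversion rate, i.e. the rate assuming A and B do not differ.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Upper-tail probability of a standard normal at z.
    return 0.5 * math.erfc(z / math.sqrt(2))

# Hypothetical counts: (conversions, visitors) for the original and a variation.
print(one_sided_p_value(100, 1000, 100, 1000))  # identical rates: no evidence either way
print(one_sided_p_value(100, 1000, 130, 1000))  # variation converts better: smaller p-value
```

The larger the observed difference relative to the noise, the smaller the p-value becomes.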

So GWO must be using a p-value cutoff somewhere between 1.5% and 3.4% (I'm guessing it's 2.5%, at least in this case; it might depend on the number of combinations).

So when (100% - chance to beat %) is greater than that cutoff, GWO will say that it has not collected enough information, and when a combination has (100% - chance to beat %) below the cutoff, a winner is found. Obviously, if that line has only just been crossed, the result can easily cross back with a little more data.
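The rule above can be sketched in a few lines of Python. This is only an illustration of the reasoning: the function name `gwo_verdict` is hypothetical, and the 2.5% default is the guess made above, not a documented GWO setting.

```python
def gwo_verdict(chance_to_beat_pct, threshold_pct=2.5):
    """Apply the guessed rule: a winner is declared only when
    (100% - chance to beat %) falls below the threshold."""
    remaining = 100.0 - chance_to_beat_pct
    if remaining < threshold_pct:
        return "winner found"
    return "not enough data"

# The two "chance to beat original" figures reported in the question.
print(gwo_verdict(98.5))  # 1.5% is below the assumed 2.5% threshold
print(gwo_verdict(96.6))  # 3.4% is above the assumed 2.5% threshold
```

With the assumed threshold, the first figure counts as a winner and the second does not, even though the two are less than two percentage points apart.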

To summarize, you shouldn't be checking the results frequently. You should set up a test, ignore it for a long while, and then check the results.

> Are my numbers too tiny for Google to be absolutely sure or something?

No
