I work for a medical lab company. They need to be able to search through all their client data. So far they have a few years' worth in storage, about 4 million pieces of paper, and they are adding 10,000 pages per day. Data that is 6 months old needs to be accessed about 10-20 times per day. They are deciding whether to spend 80k on a scanning system and have the secretaries scan everything in house, or to hire a company like Iron Mountain to do this. Iron Mountain will charge around 8 cents per page, which adds up to around $300k for the amount of paper we have, plus more money every day for the 10,000 new sheets.
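A quick check of the outsourcing arithmetic, assuming a flat 8 cents per page with no volume discount (the function name is made up for illustration):

```python
def outsourcing_cost(pages, cents_per_page=8):
    """Return the cost in dollars of scanning `pages` at a flat per-page rate."""
    # Work in cents first so the result is not skewed by float rounding.
    return pages * cents_per_page / 100


backlog_cost = outsourcing_cost(4_000_000)  # the paper already in storage
daily_cost = outsourcing_cost(10_000)       # each day's new intake
print(f"backlog: ${backlog_cost:,.0f}, per day: ${daily_cost:,.0f}")
```

At that rate the backlog comes to about $320k and the daily intake to about $800 per day.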

I am thinking that perhaps I can build a database and do all the scanning in house.

- What are those systems that are used to scan checks and mail and they read really messy hand writing really well?

- has anyone had experience building a database with a bunch of OCR'd searchable documents? What tools should I use for my problem?

- Can you recommend the best OCR libraries?

- As a programmer, what would you do to solve this problem?

FYI none of the answers below answer my questions well enough

Having worked at a medical office doing data entry, I can say that OCR will almost certainly not work. Our forms had special text boxes, with a separate box for each letter, and even for those the software was correct only about 75% of the time. Some forms allowed freeform writing, but the result was universally gibberish.

I would recommend going the meta-data route; scan everything, but instead of trying to OCR each form, just store it as an image and add meta-data tags.

My thinking is this: the goal of OCR in this case is to enable all forms to be read by the computer, thus making data retrieval simpler. However, you don't really need OCR to do that here; all you need is some way for someone to find a form really fast and get the right info off it. As such, even if you store each form as an image, adding the right meta-data tags would allow you to retrieve whatever you need whenever you need it, and the person running the search could either read it right off the stored form, or print it and read it that way.

EDIT: One fairly simple way of executing this plan could be to use a simple database schema, where each image is stored as a single field. Each row could then contain something like the following, depending on your needs:

- image name

- patient ID

- date of visit

- ...

Basically, think of all the ways you'd want to search for a given file, and make sure each one is included as a field. Do you look up patients by Patient ID? Include that. Date of visit? Same. If you aren't familiar with designing a database around search requirements, I suggest hiring a developer with database design skills; you can end up with a quick database schema which includes everything you want and is powerful enough for your indexing needs. (Bear in mind that much of this will be highly specific to your application. You'll want to optimize this for your situation, and ensure you set it up as well as you can at the outset.)
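As a rough illustration of the fields listed above, here is a minimal sketch using SQLite from Python. The table name, column names and helper functions are assumptions made up for this example; a real system would add whatever other search fields you need.

```python
import sqlite3


def create_schema(conn):
    """Create a hypothetical table holding one row of meta-data per scanned form."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS scanned_form (
               id         INTEGER PRIMARY KEY,
               image_name TEXT NOT NULL,   -- file name or path of the stored scan
               patient_id TEXT NOT NULL,
               visit_date TEXT NOT NULL    -- ISO format, YYYY-MM-DD
           )"""
    )
    # Index the fields people actually search by.
    conn.execute(
        "CREATE INDEX IF NOT EXISTS idx_form_patient_date "
        "ON scanned_form (patient_id, visit_date)"
    )


def add_form(conn, image_name, patient_id, visit_date):
    """Record the meta-data keyed in for one scanned image."""
    conn.execute(
        "INSERT INTO scanned_form (image_name, patient_id, visit_date) "
        "VALUES (?, ?, ?)",
        (image_name, patient_id, visit_date),
    )


def find_forms(conn, patient_id, visit_date=None):
    """Return image names for a patient, optionally narrowed to one visit date."""
    sql = "SELECT image_name FROM scanned_form WHERE patient_id = ?"
    params = [patient_id]
    if visit_date is not None:
        sql += " AND visit_date = ?"
        params.append(visit_date)
    sql += " ORDER BY visit_date, image_name"
    return [row[0] for row in conn.execute(sql, params)]
```

Usage would be along the lines of `conn = sqlite3.connect("forms.db")`, `create_schema(conn)`, then `find_forms(conn, "P1")` to list every stored image for that patient.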

Divide and Conquer!

If you do decide to go down the route of doing this 'in-house', your design needs to have scalability built in from day 1.

This is one rare case in which the task can be broken down and done in parallel.

If you have 10K documents a day and you build and deploy 10 identical units (scanner + server + custom app), each unit only needs to handle around 1K documents.

The challenge would be to make it a cheap and reliable 'turn key solution'.

The application side is probably the easier element. So long as you have a good automated update system designed in from the start, you can simply add hardware as you expand your 'farm/cluster'.

Keeping your design modular (i.e. using cheap commodity hardware) will allow you to mix and match hardware and replace it on demand without affecting daily throughput.

Start with a trial: build a turnkey unit that can easily sustain 1,000 documents. Once this works flawlessly, scale it up!

Good luck!

Edit 1:

OK, here is a more detailed answer to each of the specific points you have raised:

What are those systems that are used to scan checks and mail and they read really messy hand writing really well?

One such system, used by the mail delivery company TNT here in the UK, is the HYCR Engine from the Netherlands-based company Prime Vision.

I highly suggest you contact them. Handwriting recognition is never going to be very accurate, whereas OCR on printed characters can sometimes achieve 99% accuracy.

has anyone had experience building a database with a bunch of OCR'd searchable documents? What tools should I use for my problem?

Not specifically OCR'd documents, but for one of our clients I build and maintain a very large and complex EDMS which holds a wide variety of document formats. It is searchable in multiple ways, with a complex set of data access permissions.

In terms of advice, the main thing to bear in mind is how you store the documents. There are two options:

- Keep documents on file and have a link in the database

- Store document directly in Database as BLOB data.

Each approach has its own set of pros and cons. We opted for the first route. In terms of searchability, once you have the meta data of the actual documents, it is just a matter of creating custom search queries. I built a rank-based search; it simply gave a higher ranking to the documents that matched more of the tokens. Of course, you could use an off-the-shelf search library such as the Lucene project.
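To give an idea of what such a rank-based search looks like, here is a minimal sketch (not the code from our EDMS). It assumes each document's meta data has been flattened into a plain string; the function name and the sample data are made up for illustration.

```python
def rank_documents(query, documents):
    """Rank documents by how many distinct query tokens their meta data contains.

    `documents` maps a document id to its meta data as one string.
    Documents matching no token at all are left out of the result.
    """
    tokens = set(query.lower().split())
    scored = []
    for doc_id, metadata_text in documents.items():
        words = set(metadata_text.lower().split())
        score = len(tokens & words)  # number of query tokens found
        if score:
            scored.append((score, doc_id))
    # Highest score first; ties broken by document id for a stable order.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored]


docs = {
    "a": "smith blood panel 2010",
    "b": "smith xray",
    "c": "jones xray",
}
print(rank_documents("smith blood", docs))  # ['a', 'b']
```

A library like Lucene does the same job with proper tokenizing, stemming and relevance scoring, so this is only worth hand-rolling for simple cases.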

Can you recommend the best OCR libraries?

Yes:

- tesseract

- tessnet (a .NET wrapper for tesseract)

- OCRopus (Google-sponsored)

As a programmer, what would you do to solve this problem?

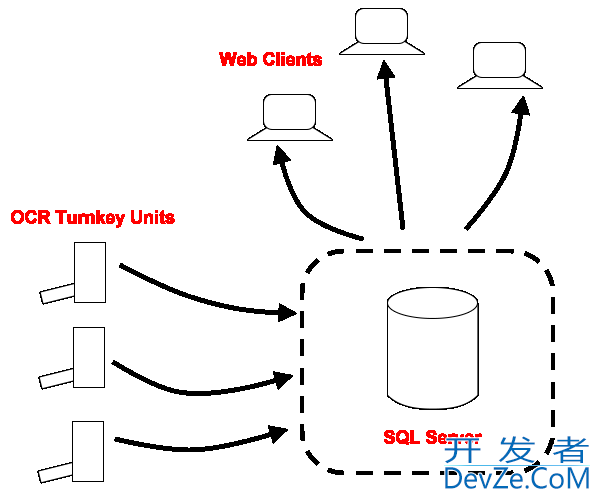

As described above (see the diagram), the heart of the system will be your database. You will need a presentation layer (this could be a web application) to allow clients to search the documents in your database. The second part will be the turnkey OCR 'servers'.

For these 'OCR servers' I would simply implement a 'drop folder' (which could be an FTP folder). Your custom application could simply monitor this drop folder (the FileSystemWatcher class in .NET). Files could be sent directly to this FTP folder.

Your custom OCR application would monitor the drop folder and, upon receiving a new file, scan it, generate the meta data and then move it to a 'Scanned' folder. Files that are duplicates or that failed to scan can be moved to their own 'Failed' folder.

The OCR application would then connect to your main Database and do some Inserts or updates (this moves the META DATA to the main database).
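Here is a minimal sketch of one pass over such a drop folder, written in Python rather than .NET and using simple polling instead of a file watcher. The function name, the `extract_metadata` callable (a stand-in for whatever OCR step you use) and the hash-based duplicate check are all assumptions for illustration.

```python
import hashlib
import shutil
from pathlib import Path


def process_drop_folder(drop, scanned, failed, extract_metadata, seen_hashes):
    """Process every file currently in `drop` and return the meta-data records.

    `extract_metadata` is a hypothetical callable that takes a path and
    returns a dict, raising an exception if the file cannot be read.
    `seen_hashes` is a set of content hashes used to detect duplicates.
    """
    drop, scanned, failed = Path(drop), Path(scanned), Path(failed)
    scanned.mkdir(parents=True, exist_ok=True)
    failed.mkdir(parents=True, exist_ok=True)
    records = []
    for path in sorted(p for p in drop.iterdir() if p.is_file()):
        digest = hashlib.sha256(path.read_bytes()).hexdigest()
        try:
            if digest in seen_hashes:
                raise ValueError("duplicate file")
            metadata = extract_metadata(path)
        except Exception:
            # Duplicates and unreadable files go to the 'Failed' folder.
            shutil.move(str(path), str(failed / path.name))
            continue
        seen_hashes.add(digest)
        shutil.move(str(path), str(scanned / path.name))
        records.append({"file": path.name, "sha256": digest, **metadata})
    return records
```

The returned records are what you would then insert into the main database. Calling this function in a loop with a short sleep gives you a crude folder monitor.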

In the background, you can keep your 'Scanned' folder synchronized with a mirrored folder on your database server (the SQL server shown in the diagram). This physically copies each scanned and OCR'd document to the main server, where the linked records have already been inserted.

Anyway, that's how I'd tackle this problem. I've personally implemented one or more of these solutions, so I'm confident this would work and be scalable.

Scalability is key here. For this reason you may want to look at alternatives to the traditional databases.

I would recommend that you at least think about a NoSQL-type database for this project:

E.g.

- Cassandra

- HyperTable

- CouchDB

Un-ashamed Plug:

Of course for £40,000 I'd build and set up the whole solution for you (including hardware) !

:) I'm kidding SO users!

EDIT 2:

Note the mention of META DATA. By this I mean the same thing others have alluded to: you should retain the original scan as an image file, along with the OCR'd meta data (so that it allows for text searching).

I thought I'd make this clear, in case it was assumed that it was not part of my solution.

You are currently solving the wrong problem, and 300K is peanuts, as others have already shown. You should focus on eliminating the 10K pages a day you receive now. The other problem just takes a fixed amount of money.

OCR only works reliably for handwriting in very limited domains (recognizing bank numbers, zip codes). The fine results OCR companies advertise are for printed computer documents in standard formats and standard fonts.

The data entry should not be on paper. Period. Focus on making it so. Push the problem further upfront.

And yes, this is not a programmer problem. It is a management problem.

There's no way you're going to find OCR software that will read handwriting reliably, especially handwriting you'd describe as 'messy.'

You can spend a lot of money on a scanning system, but that's going to get very costly, very quickly (at least $15k per high-end scanner, plus the cost of the software, training, etc.). And without reliable OCR you'd also have to manually key all the data you want to capture from each document. Obviously this will add to your costs significantly (more software, additional employees, etc.). On top of that, the turnaround time from when new documents are created to when they're available to users may not be acceptable for the daily volume you're talking about.

You'd be better off sending all your documents to a company like Iron Mountain. For the volume you're talking about - and assuming the documents you want scanned/keyed aren't overly complex - I'd be surprised if you couldn't get a better price than $.08 per page.

Such a company can deliver your images and data for import into some sort of document management software, or you can write your own app.

Update:

Using @eykanal's idea as a starting point:

Examples of meta data that you would store would be a document ID, a location for the source image and something to look up the record by (patient ID, SSN, name, etc.). The 'record locator' data will probably need to be keyed in by data entry clerks looking at the physical form when they scan it.

Original:

- Not sure about what the check readers are called, but (at least for checks) they are only looking for numbers, so with such a restricted set of characters, they are much more accurate than general OCR is.

One thing to think about:

Take 10 seconds as an approximate per page time to scan.

Then 10,000 * 10 / 60 /60 = ~27.8 hours to scan your daily intake.

That means more than three full-time employees JUST for scanning every day. That may be fine with you and your employer, but I would guess it is cheaper to outsource the scanning. Even 3 low-paid employees combined, after benefits etc., are going to cost > 100k / year.
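The same estimate as a small sketch, so you can plug in your own numbers. The 10 seconds per page is the rough figure from above, and the 8-hour shift is my assumption.

```python
def scanning_workload(pages_per_day, seconds_per_page, hours_per_shift=8.0):
    """Return (scanning hours per day, full-time staff needed to cover them)."""
    hours = pages_per_day * seconds_per_page / 3600
    return hours, hours / hours_per_shift


hours, staff = scanning_workload(10_000, 10)
print(f"{hours:.1f} hours/day, about {staff:.2f} full-time scanners")
```

With these inputs it works out to roughly 27.8 hours of scanning per day, or about 3.5 people on 8-hour shifts.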

Also:

In past experience with Xerox document scanners, they produced about 50-100 KB of image data per page, depending on settings and not including the OCR text. Considering you are talking about medical records, you are probably going to need to store those images as well (I can imagine there being legal issues if you don't). That means 200-400 GB for what you have, plus 0.5 to 1 GB per day.
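A small sketch of that storage estimate, assuming decimal units (1 GB = 1,000,000 KB) and the 50-100 KB per page range mentioned above:

```python
def storage_gb(pages, kb_per_page):
    """Return the image storage needed in GB, using 1 GB = 1,000,000 KB."""
    return pages * kb_per_page / 1_000_000


for kb in (50, 100):
    backlog = storage_gb(4_000_000, kb)  # the existing 4 million pages
    daily = storage_gb(10_000, kb)       # one day's intake
    print(f"{kb} KB/page: backlog {backlog:.0f} GB, daily {daily:.1f} GB")
```

Scan resolution and color depth will move the per-page figure a lot, so measure a sample batch before sizing the disks.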

OCR-ing doctors' notes can't be easy :D

Try to figure out which of those 4M pages are immediately needed, and hire Iron Mountain for those.

As for the rest, let your client know that you've been given a somewhat unfeasible task, and try to come up with a practical solution -- maybe they can just input a small portion of those papers and rely on statistics?

For the future, if you can format the information into multiple choice, something like Scantron might be an affordable solution.

In my opinion the biggest problem is getting the paper digitized.

Once you have images I can think of two solutions (or better, ideas).

Write an application (not a web app!!!) which shows the images one by one to the secretaries. The secretaries tag the images, and a reference to each image and its tags is stored in a database. The UI must be very well designed (no loading time, an auto-guessing feature...) to get as much working speed as possible.

(my favourite) Use OCR software to scan the images and get searchable text. Then implement an application which builds up a tree of the words used in the documents. Each word should have references to the documents it belongs to. Words like "in", "the", "an", "of"... should be excluded from the tree. Then you can search very quickly through the tree and find the documents. If you want to match groups of words, search for every single word and intersect the results. To perform more advanced searches through the whole text, I would recommend a modified DFA which can process each character of data using only cheap instructions like a table lookup (very advanced, I know it because of my interest in compiler design)... it should be possible to scan through the whole text data (at GB level) in acceptable time...
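A minimal sketch of the word index idea. Note that it uses a plain dictionary (hash map) instead of a tree, which is the simplest way to do it in Python; the stop-word list, function names and sample texts are made up for illustration.

```python
from collections import defaultdict

# Common words that are not worth indexing (a tiny, assumed list).
STOP_WORDS = {"in", "the", "an", "of", "a", "and", "to"}


def build_index(documents):
    """Map each non-stop word to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in text.lower().split():
            if word not in STOP_WORDS:
                index[word].add(doc_id)
    return index


def search(index, query):
    """Return the ids of documents containing every non-stop word in `query`."""
    words = [w for w in query.lower().split() if w not in STOP_WORDS]
    if not words:
        return set()
    result = set(index.get(words[0], set()))
    for word in words[1:]:
        result &= index.get(word, set())  # intersect the per-word results
    return result


docs = {
    1: "glucose level in the blood",
    2: "blood pressure of the patient",
    3: "glucose tolerance",
}
index = build_index(docs)
print(search(index, "blood glucose"))  # {1}
```

Real OCR output would also need punctuation stripping and some tolerance for misread characters, which this sketch leaves out.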

These are just suggestions!!!!! I just thought about it... Maybe there is something useful!

The best OCR software I have ever seen in my life is called ABBYY: http://www.abbyy.com/company

I have their software and use it at home for work related projects. It will scan documents, even documents that have graphics, such as logos and checkboxes, etc, and convert the resulting document to either Microsoft Word or PDF. Those are the most common exports. Whatever it comes across that it can't convert to text (like a logo for example), it will simply create a graphic image and place it in the document.

As far as how the post office does this, they use special OCR software (probably ABBYY) that can recognize hand writing: http://en.wikipedia.org/wiki/Remote_Bar_Coding_System

ABBYY also has an SDK, so if you would like to write your own application and integrate OCR into it, you can do that too!

Like others have already suggested, your situation is pretty much a standard ECM (enterprise content management)/archiving problem.

This is usually handled by using a "scanning platform" (depending on volume, the big ones are probably going to be something like EMC² Captiva or Kofax, or they can be done off-site as you have already indicated) to scan the paper documents and store the digital documents in a repository of some sort. This repository is traditionally an ECM platform such as Documentum (EMC²), FileNet (IBM), OpenText, ... These platforms will then offer you all kinds of features to use in conjunction with your digital documents, including full text search. Of course, all of the above has a price tag.

To give you my opinion on your specific questions:

- What are those systems that are used to scan checks and mail and they read really messy hand writing really well?

Well any scanning solution will do. I'm not an expert on scanning, but I doubt any of these solutions will yield good results on hand writing.

- has anyone had experience building a database with a bunch of OCR'd searchable documents? What tools should I use for my problem?

Nope. But this is what the ECM repositories will handle for you. There are alternatives, most notably Apache Lucene (http://lucene.apache.org) in the Java world.

- Can you recommend the best OCR libraries?

As mentioned before, the only OCR library I know of that yields somewhat decent results is ABBYY.

- As a programmer, what would you do to solve this problem?

If you do not need ECM, and you're confident that in the future you won't need the extra features provided by an ECM platform, then it's worth looking into building something custom. It's unlikely that this will be easy and straightforward, so you'll have to invest a lot of time designing it, and keep in mind that keeping something like this scalable will be no easy task.

Free bootable OCR server: http://www.watchocr.com/

As featured on slashdot: http://linux.slashdot.org/story/10/07/22/1852234/Open-Source-OCR-That-Makes-Searchable-PDFs

Worth a shot at least.
